社区日报 第485期(2018-12-21)

http://t.cn/EUu5RI3

2、Elasticsearch7.0计算向量距离新特性抢先看

http://t.cn/EUB4vbo

3、Kubernetes上部署高可用和可扩展的Elasticsearch

http://t.cn/ELhogLr

编辑:铭毅天下

归档:https://elasticsearch.cn/article/6214

订阅:https://tinyletter.com/elastic-daily

http://t.cn/EUu5RI3

2、Elasticsearch7.0计算向量距离新特性抢先看

http://t.cn/EUB4vbo

3、Kubernetes上部署高可用和可扩展的Elasticsearch

http://t.cn/ELhogLr

编辑:铭毅天下

归档:https://elasticsearch.cn/article/6214

订阅:https://tinyletter.com/elastic-daily 收起阅读 »

Day 21 - ECE 版本升级扫雷实战

下面分享的这个案例是当我们在把集群从5.4.1 升级到5.6.12 的过程中,遇到节点关闭受阻,升级不完整等情景。以及对应的处理方法。

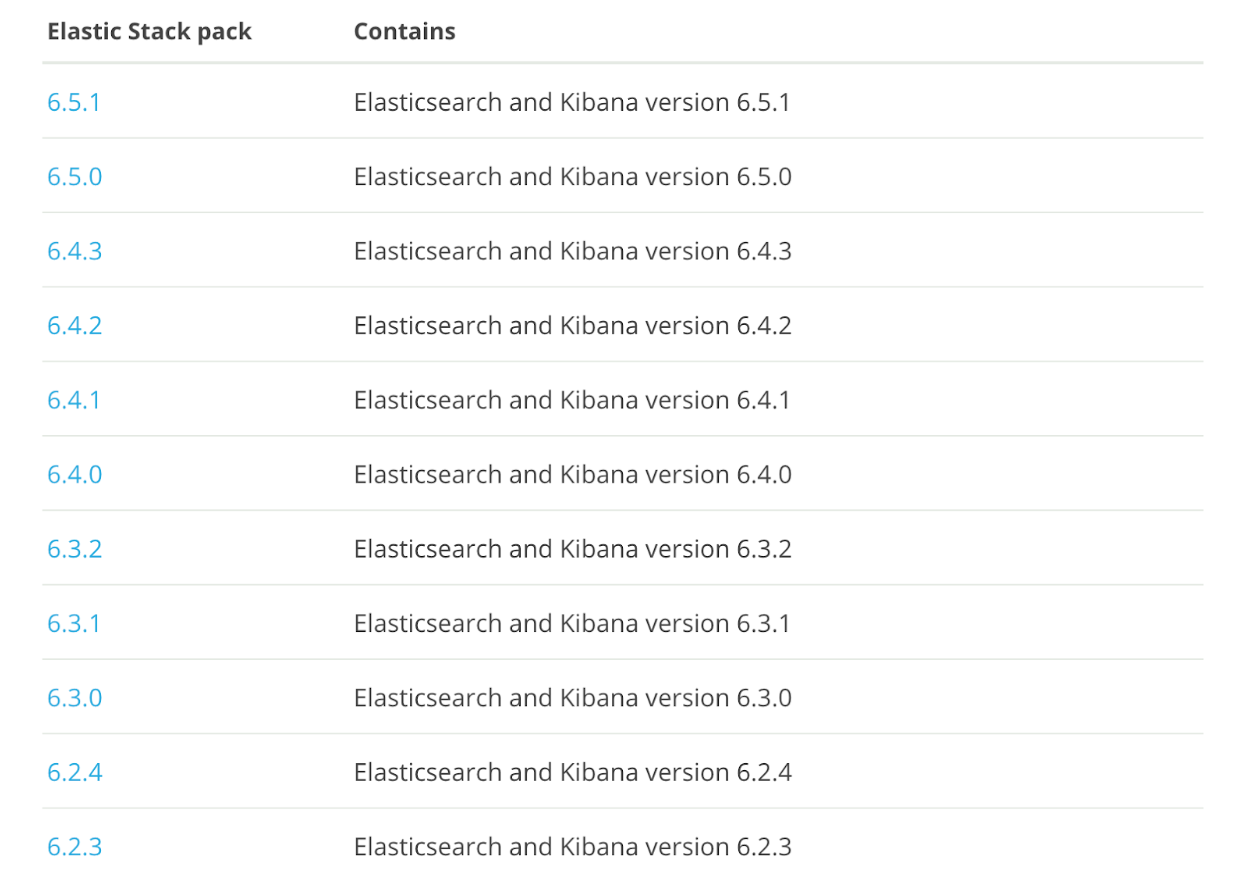

首先在ECE中,版本是通过stack的方式管理

Ref : https://www.elastic.co/guide/e ... .html

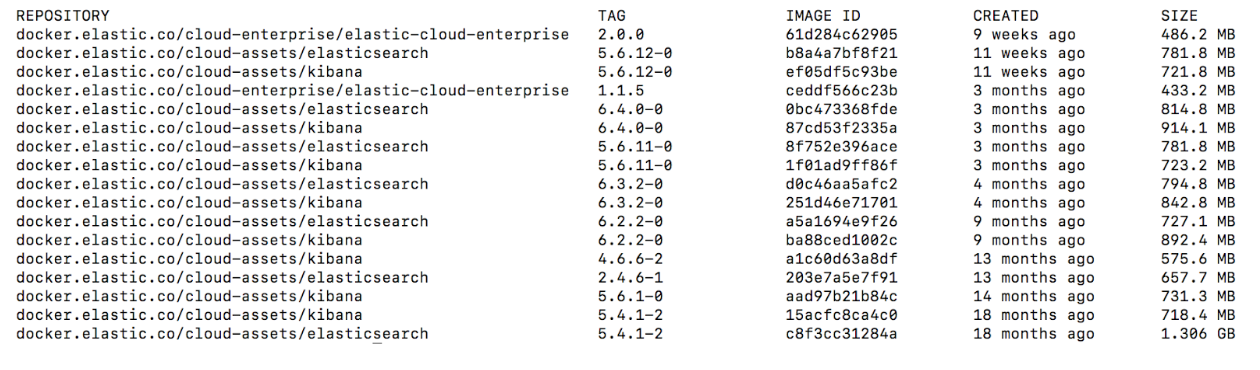

这些版本都是以docker images的形式存储

因此,ECE根据不同的版本,然后选择对应的docker image就可以创建一个节点了. 那么升级的过程就可以简单分成几个步骤

1.exclude准备升级的节点。

2.停止节点ES进程,更换container 版本。

3.重新启动节点,加入集群。

4.在其他节点上重复以上流程。

在这个过程中, 实际使用的时候发现有一些需要注意的雷区.

扫雷一:使用UI触发升级,必须保证集群没有自定义插件和bundles

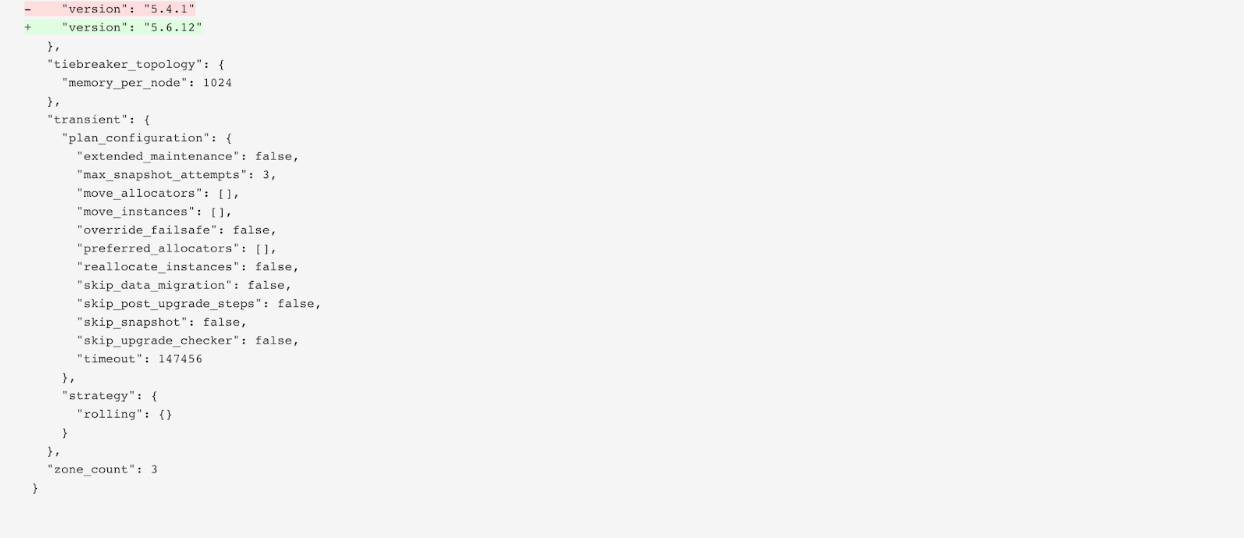

ECE 里面的集群操作是通过plan来控制的

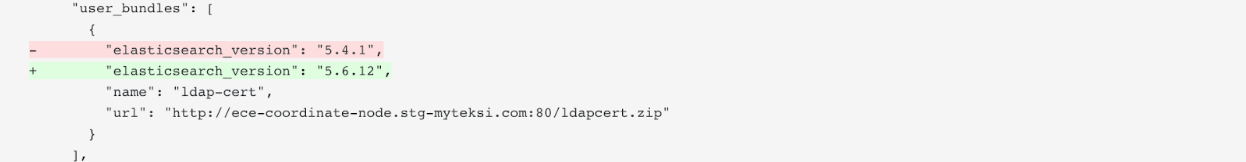

任何的集群操作最终都会生成一个plan的diff。如上图,把集群从5.4.1 升级到 5.6.12 会产生以上diff.

正常情况下是没有问题的.

如果集群配置了自定义bundles, 比如LDAP bundles, ref:https://www.elastic.co/guide/e ... .html

那么在集群的plan里面就会存在这么一段配置

那么当我们在按下升级按钮的时候

ECE 只在plan中修改了集群大版本的配置, 但是并没有修改自定义bundles中的版本号(仍然是5.4.1).

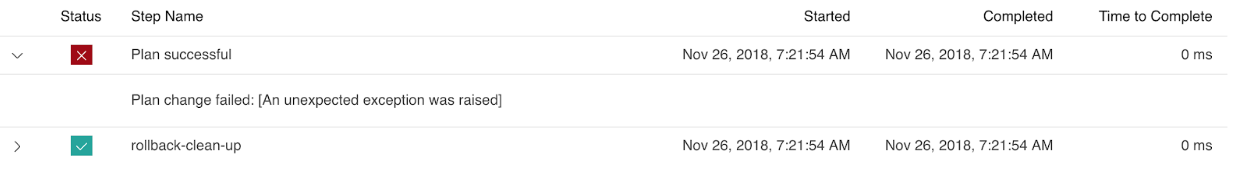

在这种情况下去执行升级,会直接产生报错.

界面上没有显示原因, 但是这是因为plan里面大版本和bundle中的版本不一致,然后会导致新增的节点无法启动. 于是ECE 就认为集群升级失败了.

解决方法是手动编辑plan,把自定义bundles中的版本号改成和集群版本一致

然后使用ECE 提供的一种手动使用plan进行集群升级的方式进行升级.

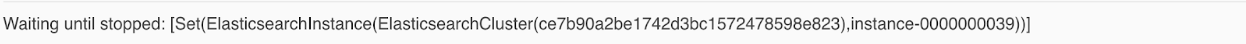

扫雷二:节点无法关闭

ECE 控制container 是通过一个叫做 constructor的服务。constructor 通过接收集群的更改需求,制定具体的更改计划与步骤,指导allocator对container进行操作。同时也负责保证集群高可靠性,通过Availability Zone的数量在不同的AZ上面部署节点。

当Allocator接受到关闭container命令的时候,会尝试去关闭container,如果container处于一个阻塞状态无法响应, 那么关闭命令无法执行成功。这个时候constructor会等待节点关闭,但是allocator又认为节点已经接受到关闭命令了。又或者constructor发送给allocator的过程中网络丢包, 这个时候allocator 没有正确接受关闭container的命令. 整个升级进程就卡住了。 这种情况十分罕见,通常发现一个container如果处于”正在关闭”时间太久了, 那么通常就是中间的通信出现问题了.

解决办法是可以通过手动停止container, 在对应的allocator上面找到container,使用

docker stop <container_name>

停止container,这样可以出发allocator更新container的健康状态,上报这个container已经关闭了, 从而打通流程并执行下一步。

扫雷三: 多版本并存

如果使用上面的方式强行关闭docker container, 虽然可以让升级进程继续进行下去. 但是被手动关闭的节点会保留原来的版本。于是在升级后查看各个节点的版本,会发现部分节点是5.4.1, 部分是5.6.12.

因为节点是强制关闭的, ECE直接认为节点已经完成升级,并重新启动这个container. 而在这个处理中,跳过了升级docker image的一步.

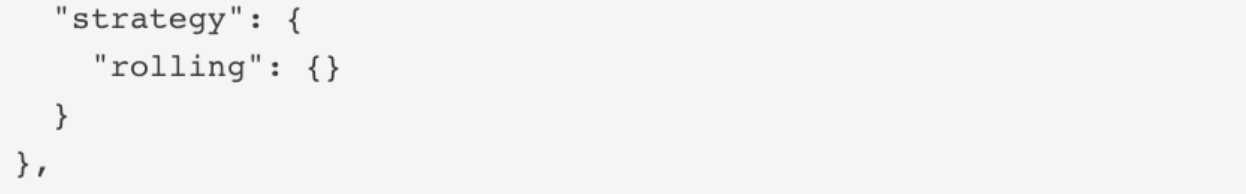

为什么不是生成一个新的container呢? 因为从plan里面可以看到

在默认情况下, ECE 处理版本升级是使用rolling 策略 Ref: https://www.elastic.co/guide/e ... .html

在这个策略下,ECE会停止当前container并直接修改重启。

如果ECE集群容量允许, 可以改成grow_and_shrink 策略, 这样ECE 会创建新的container并且销毁旧的container, 避免集群出现多版本.

如果出现了多版本的集群,可以通过更改集群任意一个配置来触发 grow_and_shrink 同样可以使到版本回归一致.

总结来说ECE 在版本升级方面还是有很多需要改进的地方. 对于ECE用户再说在使用ECE的版本升级功能的时候主要有以下建议

1. 自己学会手动修改plan. 这也是每一个ECE support engineer 都会干的事情.

2.如果集群容量允许,尽量使用 grow_and_shrink的策略来进行集群操作.

下面分享的这个案例是当我们在把集群从5.4.1 升级到5.6.12 的过程中,遇到节点关闭受阻,升级不完整等情景。以及对应的处理方法。

首先在ECE中,版本是通过stack的方式管理

Ref : https://www.elastic.co/guide/e ... .html

这些版本都是以docker images的形式存储

因此,ECE根据不同的版本,然后选择对应的docker image就可以创建一个节点了. 那么升级的过程就可以简单分成几个步骤

1.exclude准备升级的节点。

2.停止节点ES进程,更换container 版本。

3.重新启动节点,加入集群。

4.在其他节点上重复以上流程。

在这个过程中, 实际使用的时候发现有一些需要注意的雷区.

扫雷一:使用UI触发升级,必须保证集群没有自定义插件和bundles

ECE 里面的集群操作是通过plan来控制的

任何的集群操作最终都会生成一个plan的diff。如上图,把集群从5.4.1 升级到 5.6.12 会产生以上diff.

正常情况下是没有问题的.

如果集群配置了自定义bundles, 比如LDAP bundles, ref:https://www.elastic.co/guide/e ... .html

那么在集群的plan里面就会存在这么一段配置

那么当我们在按下升级按钮的时候

ECE 只在plan中修改了集群大版本的配置, 但是并没有修改自定义bundles中的版本号(仍然是5.4.1).

在这种情况下去执行升级,会直接产生报错.

界面上没有显示原因, 但是这是因为plan里面大版本和bundle中的版本不一致,然后会导致新增的节点无法启动. 于是ECE 就认为集群升级失败了.

解决方法是手动编辑plan,把自定义bundles中的版本号改成和集群版本一致

然后使用ECE 提供的一种手动使用plan进行集群升级的方式进行升级.

扫雷二:节点无法关闭

ECE 控制container 是通过一个叫做 constructor的服务。constructor 通过接收集群的更改需求,制定具体的更改计划与步骤,指导allocator对container进行操作。同时也负责保证集群高可靠性,通过Availability Zone的数量在不同的AZ上面部署节点。

当Allocator接受到关闭container命令的时候,会尝试去关闭container,如果container处于一个阻塞状态无法响应, 那么关闭命令无法执行成功。这个时候constructor会等待节点关闭,但是allocator又认为节点已经接受到关闭命令了。又或者constructor发送给allocator的过程中网络丢包, 这个时候allocator 没有正确接受关闭container的命令. 整个升级进程就卡住了。 这种情况十分罕见,通常发现一个container如果处于”正在关闭”时间太久了, 那么通常就是中间的通信出现问题了.

解决办法是可以通过手动停止container, 在对应的allocator上面找到container,使用

docker stop <container_name>

停止container,这样可以出发allocator更新container的健康状态,上报这个container已经关闭了, 从而打通流程并执行下一步。

扫雷三: 多版本并存

如果使用上面的方式强行关闭docker container, 虽然可以让升级进程继续进行下去. 但是被手动关闭的节点会保留原来的版本。于是在升级后查看各个节点的版本,会发现部分节点是5.4.1, 部分是5.6.12.

因为节点是强制关闭的, ECE直接认为节点已经完成升级,并重新启动这个container. 而在这个处理中,跳过了升级docker image的一步.

为什么不是生成一个新的container呢? 因为从plan里面可以看到

在默认情况下, ECE 处理版本升级是使用rolling 策略 Ref: https://www.elastic.co/guide/e ... .html

在这个策略下,ECE会停止当前container并直接修改重启。

如果ECE集群容量允许, 可以改成grow_and_shrink 策略, 这样ECE 会创建新的container并且销毁旧的container, 避免集群出现多版本.

如果出现了多版本的集群,可以通过更改集群任意一个配置来触发 grow_and_shrink 同样可以使到版本回归一致.

总结来说ECE 在版本升级方面还是有很多需要改进的地方. 对于ECE用户再说在使用ECE的版本升级功能的时候主要有以下建议

1. 自己学会手动修改plan. 这也是每一个ECE support engineer 都会干的事情.

2.如果集群容量允许,尽量使用 grow_and_shrink的策略来进行集群操作.

收起阅读 »

社区日报 第484期 (2018-12-20)

http://t.cn/E45chlT

2.有赞全链路压测实战

http://t.cn/E45cViJ

3.Elasticsearch bool query小结

http://t.cn/E45cKEi

编辑:金桥

归档:https://elasticsearch.cn/article/6212

订阅:https://tinyletter.com/elastic-daily

http://t.cn/E45chlT

2.有赞全链路压测实战

http://t.cn/E45cViJ

3.Elasticsearch bool query小结

http://t.cn/E45cKEi

编辑:金桥

归档:https://elasticsearch.cn/article/6212

订阅:https://tinyletter.com/elastic-daily 收起阅读 »

Day 20 - Elastic性能实战指南

让Elasticsearch飞起来!——性能优化实践干货

0、题记

Elasticsearch性能优化的最终目的:用户体验爽。

关于爽的定义——著名产品人梁宁曾经说过“人在满足时候的状态叫做愉悦,人不被满足就会难受,就会开始寻求。如果这个人在寻求中,能立刻得到即时满足,这种感觉就是爽!”。

Elasticsearch的爽点就是:快、准、全!

关于Elasticsearch性能优化,阿里、腾讯、京东、携程、滴滴、58等都有过很多深入的实践总结,都是非常好的参考。本文换一个思路,基于Elasticsearch的爽点,进行性能优化相关探讨。

1、集群规划优化实践

1.1 基于目标数据量规划集群

在业务初期,经常被问到的问题,要几个节点的集群,内存、CPU要多大,要不要SSD?

最主要的考虑点是:你的目标存储数据量是多大?可以针对目标数据量反推节点多少。

1.2 要留出容量Buffer

注意:Elasticsearch有三个警戒水位线,磁盘使用率达到85%、90%、95%。

不同警戒水位线会有不同的应急处理策略。

这点,磁盘容量选型中要规划在内。控制在85%之下是合理的。

当然,也可以通过配置做调整。

1.3 ES集群各节点尽量不要和其他业务功能复用一台机器。

除非内存非常大。 举例:普通服务器,安装了ES+Mysql+redis,业务数据量大了之后,势必会出现内存不足等问题。

1.4 磁盘尽量选择SSD

Elasticsearch官方文档肯定推荐SSD,考虑到成本的原因。需要结合业务场景,

如果业务对写入、检索速率有较高的速率要求,建议使用SSD磁盘。

阿里的业务场景,SSD磁盘比机械硬盘的速率提升了5倍。

但要因业务场景而异。

1.5 内存配置要合理

官方建议:堆内存的大小是官方建议是:Min(32GB,机器内存大小/2)。

Medcl和wood大叔都有明确说过,不必要设置32/31GB那么大,建议:热数据设置:26GB,冷数据:31GB。

总体内存大小没有具体要求,但肯定是内容越大,检索性能越好。

经验值供参考:每天200GB+增量数据的业务场景,服务器至少要64GB内存。

除了JVM之外的预留内存要充足,否则也会经常OOM。

1.6 CPU核数不要太小

CPU核数是和ESThread pool关联的。和写入、检索性能都有关联。

建议:16核+。

1.7 超大量级的业务场景,可以考虑跨集群检索

除非业务量级非常大,例如:滴滴、携程的PB+的业务场景,否则基本不太需要跨集群检索。

1.8 集群节点个数无需奇数

ES内部维护集群通信,不是基于zookeeper的分发部署机制,所以,无需奇数。

但是discovery.zen.minimum_master_nodes的值要设置为:候选主节点的个数/2+1,才能有效避免脑裂。

1.9 节点类型优化分配

集群节点数:<=3,建议:所有节点的master:true, data:true。既是主节点也是路由节点。 集群节点数:>3, 根据业务场景需要,建议:逐步独立出Master节点和协调/路由节点。

1.10 建议冷热数据分离

热数据存储SSD和普通历史数据存储机械磁盘,物理上提高检索效率。

2、索引优化实践

Mysql等关系型数据库要分库、分表。Elasticserach的话也要做好充分的考虑。

2.1 设置多少个索引?

建议根据业务场景进行存储。

不同通道类型的数据要分索引存储。举例:知乎采集信息存储到知乎索引;APP采集信息存储到APP索引。

2.2 设置多少分片?

建议根据数据量衡量。

经验值:建议每个分片大小不要超过30GB。

2.3 分片数设置?

建议根据集群节点的个数规模,分片个数建议>=集群节点的个数。

5节点的集群,5个分片就比较合理。

注意:除非reindex操作,分片数是不可以修改的。

2.4副本数设置?

除非你对系统的健壮性有异常高的要求,比如:银行系统。可以考虑2个副本以上。

否则,1个副本足够。

注意:副本数是可以通过配置随时修改的。

2.5不要再在一个索引下创建多个type

即便你是5.X版本,考虑到未来版本升级等后续的可扩展性。 建议:一个索引对应一个type。6.x默认对应_doc,5.x你就直接对应type统一为doc。

2.6 按照日期规划索引

随着业务量的增加,单一索引和数据量激增给的矛盾凸显。

按照日期规划索引是必然选择。

好处1:可以实现历史数据秒删。很对历史索引delete即可。注意:一个索引的话需要借助delete_by_query+force_merge操作,慢且删除不彻底。

好处2:便于冷热数据分开管理,检索最近几天的数据,直接物理上指定对应日期的索引,速度快的一逼!

操作参考:模板使用+rollover API使用。

2.7 务必使用别名

ES不像mysql方面的更改索引名称。使用别名就是一个相对灵活的选择。

3、数据模型优化实践

3.1 不要使用默认的Mapping

默认Mapping的字段类型是系统自动识别的。其中:string类型默认分成:text和keyword两种类型。如果你的业务中不需要分词、检索,仅需要精确匹配,仅设置为keyword即可。

根据业务需要选择合适的类型,有利于节省空间和提升精度,如:浮点型的选择。

3.2 Mapping各字段的选型流程

3.3 选择合理的分词器

常见的开源中文分词器包括:ik分词器、ansj分词器、hanlp分词器、结巴分词器、海量分词器、“ElasticSearch最全分词器比较及使用方法” 搜索可查看对比效果。 如果选择ik,建议使用ik_max_word。因为:粗粒度的分词结果基本包含细粒度ik_smart的结果。

3.4 date、long、还是keyword

根据业务需要,如果需要基于时间轴做分析,必须date类型;

如果仅需要秒级返回,建议使用keyword。

4、数据写入优化实践

4.1 要不要秒级响应?

Elasticsearch近实时的本质是:最快1s写入的数据可以被查询到。

如果refresh_interval设置为1s,势必会产生大量的segment,检索性能会受到影响。

所以,非实时的场景可以调大,设置为30s,甚至-1。

4.2 减少副本,提升写入性能。

写入前,副本数设置为0, 写入后,副本数设置为原来值。

4.3 能批量就不单条写入

批量接口为bulk,批量的大小要结合队列的大小,而队列大小和线程池大小、机器的cpu核数。

4.4 禁用swap

在Linux系统上,通过运行以下命令临时禁用交换:

sudo swapoff -a5、检索聚合优化实战

5.1 禁用 wildcard模糊匹配

数据量级达到TB+甚至更高之后,wildcard在多字段组合的情况下很容易出现卡死,甚至导致集群节点崩溃宕机的情况。

后果不堪设想。

替代方案:

方案一:针对精确度要求高的方案:两套分词器结合,standard和ik结合,使用match_phrase检索。

方案二:针对精确度要求不高的替代方案:建议ik分词,通过match_phrase和slop结合查询。

5.2极小的概率使用match匹配

中文match匹配显然结果是不准确的。很大的业务场景会使用短语匹配“match_phrase"。

match_phrase结合合理的分词词典、词库,会使得搜索结果精确度更高,避免噪音数据。

5.3 结合业务场景,大量使用filter过滤器

对于不需要使用计算相关度评分的场景,无疑filter缓存机制会使得检索更快。

举例:过滤某邮编号码。

5.3控制返回字段和结果

和mysql查询一样,业务开发中,select * 操作几乎是不必须的。

同理,ES中,_source 返回全部字段也是非必须的。

要通过_source 控制字段的返回,只返回业务相关的字段。

网页正文content,网页快照html_content类似字段的批量返回,可能就是业务上的设计缺陷。

显然,摘要字段应该提前写入,而不是查询content后再截取处理。

5.4 分页深度查询和遍历

分页查询使用:from+size;

遍历使用:scroll;

并行遍历使用:scroll+slice。

斟酌集合业务选型使用。

5.5 聚合Size的合理设置

聚合结果是不精确的。除非你设置size为2的32次幂-1,否则聚合的结果是取每个分片的Top size元素后综合排序后的值。

实际业务场景要求精确反馈结果的要注意。

尽量不要获取全量聚合结果——从业务层面取TopN聚合结果值是非常合理的。因为的确排序靠后的结果值意义不大。

5.6 聚合分页合理实现

聚合结果展示的时,势必面临聚合后分页的问题,而ES官方基于性能原因不支持聚合后分页。

如果需要聚合后分页,需要自开发实现。包含但不限于:

方案一:每次取聚合结果,拿到内存中分页返回。

方案二:scroll结合scroll after集合redis实现。

6、业务优化

让Elasticsearch做它擅长的事情,很显然,它更擅长基于倒排索引进行搜索。

业务层面,用户想最快速度看到自己想要的结果,中间的“字段处理、格式化、标准化”等一堆操作,用户是不关注的。

为了让Elasticsearch更高效的检索,建议:

1)要做足“前戏”

字段抽取、倾向性分析、分类/聚类、相关性判定放在写入ES之前的ETL阶段进行;

2)“睡服”产品经理

产品经理基于各种奇葩业务场景可能会提各种无理需求。

作为技术人员,要“通知以情晓之以理”,给产品经理讲解明白搜索引擎的原理、Elasticsearch的原理,哪些能做,哪些真的“臣妾做不到”。

7、小结

实际业务开发中,公司一般要求又想马儿不吃草,又想马儿飞快跑。

对于Elasticsearch开发也是,硬件资源不足(cpu、内存、磁盘都爆满)几乎没有办法提升性能的。

除了检索聚合,让Elasticsearch做N多相关、不相干的工作,然后得出结论“Elastic也就那样慢,没有想像的快”。

你脑海中是否也有类似的场景浮现呢?

提供相对NB的硬件资源、做好前期的各种准备工作、让Elasticsearch轻装上阵,相信你的Elasticsearch也会飞起来!

来日我们再相会......

推荐阅读: 1、阿里:https://elasticsearch.cn/article/6171 2、滴滴:http://t.cn/EUNLkNU 3、腾讯:http://t.cn/E4y9ylL 4、携程:https://elasticsearch.cn/article/6205 5、社区:https://elasticsearch.cn/article/6202 6、社区:https://elasticsearch.cn/article/708 7、社区:https://elasticsearch.cn/article/6202

Elasticsearch基础、进阶、实战第一公众号

让Elasticsearch飞起来!——性能优化实践干货

0、题记

Elasticsearch性能优化的最终目的:用户体验爽。

关于爽的定义——著名产品人梁宁曾经说过“人在满足时候的状态叫做愉悦,人不被满足就会难受,就会开始寻求。如果这个人在寻求中,能立刻得到即时满足,这种感觉就是爽!”。

Elasticsearch的爽点就是:快、准、全!

关于Elasticsearch性能优化,阿里、腾讯、京东、携程、滴滴、58等都有过很多深入的实践总结,都是非常好的参考。本文换一个思路,基于Elasticsearch的爽点,进行性能优化相关探讨。

1、集群规划优化实践

1.1 基于目标数据量规划集群

在业务初期,经常被问到的问题,要几个节点的集群,内存、CPU要多大,要不要SSD?

最主要的考虑点是:你的目标存储数据量是多大?可以针对目标数据量反推节点多少。

1.2 要留出容量Buffer

注意:Elasticsearch有三个警戒水位线,磁盘使用率达到85%、90%、95%。

不同警戒水位线会有不同的应急处理策略。

这点,磁盘容量选型中要规划在内。控制在85%之下是合理的。

当然,也可以通过配置做调整。

1.3 ES集群各节点尽量不要和其他业务功能复用一台机器。

除非内存非常大。 举例:普通服务器,安装了ES+Mysql+redis,业务数据量大了之后,势必会出现内存不足等问题。

1.4 磁盘尽量选择SSD

Elasticsearch官方文档肯定推荐SSD,考虑到成本的原因。需要结合业务场景,

如果业务对写入、检索速率有较高的速率要求,建议使用SSD磁盘。

阿里的业务场景,SSD磁盘比机械硬盘的速率提升了5倍。

但要因业务场景而异。

1.5 内存配置要合理

官方建议:堆内存的大小是官方建议是:Min(32GB,机器内存大小/2)。

Medcl和wood大叔都有明确说过,不必要设置32/31GB那么大,建议:热数据设置:26GB,冷数据:31GB。

总体内存大小没有具体要求,但肯定是内容越大,检索性能越好。

经验值供参考:每天200GB+增量数据的业务场景,服务器至少要64GB内存。

除了JVM之外的预留内存要充足,否则也会经常OOM。

1.6 CPU核数不要太小

CPU核数是和ESThread pool关联的。和写入、检索性能都有关联。

建议:16核+。

1.7 超大量级的业务场景,可以考虑跨集群检索

除非业务量级非常大,例如:滴滴、携程的PB+的业务场景,否则基本不太需要跨集群检索。

1.8 集群节点个数无需奇数

ES内部维护集群通信,不是基于zookeeper的分发部署机制,所以,无需奇数。

但是discovery.zen.minimum_master_nodes的值要设置为:候选主节点的个数/2+1,才能有效避免脑裂。

1.9 节点类型优化分配

集群节点数:<=3,建议:所有节点的master:true, data:true。既是主节点也是路由节点。 集群节点数:>3, 根据业务场景需要,建议:逐步独立出Master节点和协调/路由节点。

1.10 建议冷热数据分离

热数据存储SSD和普通历史数据存储机械磁盘,物理上提高检索效率。

2、索引优化实践

Mysql等关系型数据库要分库、分表。Elasticserach的话也要做好充分的考虑。

2.1 设置多少个索引?

建议根据业务场景进行存储。

不同通道类型的数据要分索引存储。举例:知乎采集信息存储到知乎索引;APP采集信息存储到APP索引。

2.2 设置多少分片?

建议根据数据量衡量。

经验值:建议每个分片大小不要超过30GB。

2.3 分片数设置?

建议根据集群节点的个数规模,分片个数建议>=集群节点的个数。

5节点的集群,5个分片就比较合理。

注意:除非reindex操作,分片数是不可以修改的。

2.4副本数设置?

除非你对系统的健壮性有异常高的要求,比如:银行系统。可以考虑2个副本以上。

否则,1个副本足够。

注意:副本数是可以通过配置随时修改的。

2.5不要再在一个索引下创建多个type

即便你是5.X版本,考虑到未来版本升级等后续的可扩展性。 建议:一个索引对应一个type。6.x默认对应_doc,5.x你就直接对应type统一为doc。

2.6 按照日期规划索引

随着业务量的增加,单一索引和数据量激增给的矛盾凸显。

按照日期规划索引是必然选择。

好处1:可以实现历史数据秒删。很对历史索引delete即可。注意:一个索引的话需要借助delete_by_query+force_merge操作,慢且删除不彻底。

好处2:便于冷热数据分开管理,检索最近几天的数据,直接物理上指定对应日期的索引,速度快的一逼!

操作参考:模板使用+rollover API使用。

2.7 务必使用别名

ES不像mysql方面的更改索引名称。使用别名就是一个相对灵活的选择。

3、数据模型优化实践

3.1 不要使用默认的Mapping

默认Mapping的字段类型是系统自动识别的。其中:string类型默认分成:text和keyword两种类型。如果你的业务中不需要分词、检索,仅需要精确匹配,仅设置为keyword即可。

根据业务需要选择合适的类型,有利于节省空间和提升精度,如:浮点型的选择。

3.2 Mapping各字段的选型流程

3.3 选择合理的分词器

常见的开源中文分词器包括:ik分词器、ansj分词器、hanlp分词器、结巴分词器、海量分词器、“ElasticSearch最全分词器比较及使用方法” 搜索可查看对比效果。 如果选择ik,建议使用ik_max_word。因为:粗粒度的分词结果基本包含细粒度ik_smart的结果。

3.4 date、long、还是keyword

根据业务需要,如果需要基于时间轴做分析,必须date类型;

如果仅需要秒级返回,建议使用keyword。

4、数据写入优化实践

4.1 要不要秒级响应?

Elasticsearch近实时的本质是:最快1s写入的数据可以被查询到。

如果refresh_interval设置为1s,势必会产生大量的segment,检索性能会受到影响。

所以,非实时的场景可以调大,设置为30s,甚至-1。

4.2 减少副本,提升写入性能。

写入前,副本数设置为0, 写入后,副本数设置为原来值。

4.3 能批量就不单条写入

批量接口为bulk,批量的大小要结合队列的大小,而队列大小和线程池大小、机器的cpu核数。

4.4 禁用swap

在Linux系统上,通过运行以下命令临时禁用交换:

sudo swapoff -a5、检索聚合优化实战

5.1 禁用 wildcard模糊匹配

数据量级达到TB+甚至更高之后,wildcard在多字段组合的情况下很容易出现卡死,甚至导致集群节点崩溃宕机的情况。

后果不堪设想。

替代方案:

方案一:针对精确度要求高的方案:两套分词器结合,standard和ik结合,使用match_phrase检索。

方案二:针对精确度要求不高的替代方案:建议ik分词,通过match_phrase和slop结合查询。

5.2极小的概率使用match匹配

中文match匹配显然结果是不准确的。很大的业务场景会使用短语匹配“match_phrase"。

match_phrase结合合理的分词词典、词库,会使得搜索结果精确度更高,避免噪音数据。

5.3 结合业务场景,大量使用filter过滤器

对于不需要使用计算相关度评分的场景,无疑filter缓存机制会使得检索更快。

举例:过滤某邮编号码。

5.3控制返回字段和结果

和mysql查询一样,业务开发中,select * 操作几乎是不必须的。

同理,ES中,_source 返回全部字段也是非必须的。

要通过_source 控制字段的返回,只返回业务相关的字段。

网页正文content,网页快照html_content类似字段的批量返回,可能就是业务上的设计缺陷。

显然,摘要字段应该提前写入,而不是查询content后再截取处理。

5.4 分页深度查询和遍历

分页查询使用:from+size;

遍历使用:scroll;

并行遍历使用:scroll+slice。

斟酌集合业务选型使用。

5.5 聚合Size的合理设置

聚合结果是不精确的。除非你设置size为2的32次幂-1,否则聚合的结果是取每个分片的Top size元素后综合排序后的值。

实际业务场景要求精确反馈结果的要注意。

尽量不要获取全量聚合结果——从业务层面取TopN聚合结果值是非常合理的。因为的确排序靠后的结果值意义不大。

5.6 聚合分页合理实现

聚合结果展示的时,势必面临聚合后分页的问题,而ES官方基于性能原因不支持聚合后分页。

如果需要聚合后分页,需要自开发实现。包含但不限于:

方案一:每次取聚合结果,拿到内存中分页返回。

方案二:scroll结合scroll after集合redis实现。

6、业务优化

让Elasticsearch做它擅长的事情,很显然,它更擅长基于倒排索引进行搜索。

业务层面,用户想最快速度看到自己想要的结果,中间的“字段处理、格式化、标准化”等一堆操作,用户是不关注的。

为了让Elasticsearch更高效的检索,建议:

1)要做足“前戏”

字段抽取、倾向性分析、分类/聚类、相关性判定放在写入ES之前的ETL阶段进行;

2)“睡服”产品经理

产品经理基于各种奇葩业务场景可能会提各种无理需求。

作为技术人员,要“通知以情晓之以理”,给产品经理讲解明白搜索引擎的原理、Elasticsearch的原理,哪些能做,哪些真的“臣妾做不到”。

7、小结

实际业务开发中,公司一般要求又想马儿不吃草,又想马儿飞快跑。

对于Elasticsearch开发也是,硬件资源不足(cpu、内存、磁盘都爆满)几乎没有办法提升性能的。

除了检索聚合,让Elasticsearch做N多相关、不相干的工作,然后得出结论“Elastic也就那样慢,没有想像的快”。

你脑海中是否也有类似的场景浮现呢?

提供相对NB的硬件资源、做好前期的各种准备工作、让Elasticsearch轻装上阵,相信你的Elasticsearch也会飞起来!

来日我们再相会......

推荐阅读: 1、阿里:https://elasticsearch.cn/article/6171 2、滴滴:http://t.cn/EUNLkNU 3、腾讯:http://t.cn/E4y9ylL 4、携程:https://elasticsearch.cn/article/6205 5、社区:https://elasticsearch.cn/article/6202 6、社区:https://elasticsearch.cn/article/708 7、社区:https://elasticsearch.cn/article/6202

Elasticsearch基础、进阶、实战第一公众号

收起阅读 »社区日报 第483期 (2018-12-19)

http://t.cn/E421UUE

2、(自备梯子)如何使用es和react构建电子商务搜索。

http://t.cn/E42BSad

3、Elastic:Beyond Search!

http://t.cn/E42dIzz

编辑:wt

归档:https://elasticsearch.cn/article/6210

订阅:https://tinyletter.com/elastic-daily

http://t.cn/E421UUE

2、(自备梯子)如何使用es和react构建电子商务搜索。

http://t.cn/E42BSad

3、Elastic:Beyond Search!

http://t.cn/E42dIzz

编辑:wt

归档:https://elasticsearch.cn/article/6210

订阅:https://tinyletter.com/elastic-daily 收起阅读 »

Elastic Stack 6.5 最新功能

这里也有一个围绕这些特性的电台访谈节目:

https://www.ximalaya.com/keji/14965410/139462151

下载地址:

https://www.elastic.co/downloads

这里也有一个围绕这些特性的电台访谈节目:

https://www.ximalaya.com/keji/14965410/139462151

下载地址:

https://www.elastic.co/downloads 收起阅读 »

Day 19 - 通过点击反馈优化es搜索结果排序

demo的下载地址如下,都是python脚本,环境需求:python3+,es

https://github.com/o19s/elasti ... /demo

1.准备数据

python prepare.py

下载RankLib.jar (用来训练模型) 和tmdb.json (测试数据集,tmdb的电影数据)

2.导测试数据入es

python index_ml_tmdb.py

3.训练模型

python train.py

训练脚本很简单,但是脚本里面有丰富的实现,下面介绍下主要方法。

load_features(FEATURE_SET_NAME)

这个是读取特征信息,demo定义了两个特征,分别在1.json

{

"query": {

"match": {

"title": "{{keywords}}"

}

}

}{

"query": {

"match": {

"overview": "{{keywords}}"

}

}

}movieJudgments = judgments_by_qid(judgments_from_file(filename=JUDGMENTS_FILE))4 qid:1 # 7555 Rambo

3 qid:1 # 1370 Rambo III

3 qid:1 # 1369 Rambo: First Blood Part II

3 qid:1 # 1368 First Blood

0 qid:1 # 136278 Blood

4 qid:2 # 1366 Rocky

3 qid:2 # 1246 Rocky Balboa

3 qid:2 # 60375 Rocky VI

3 qid:2 # 1371 Rocky III

3 qid:2 # 1375 Rocky V

log_features(es, judgments_dict=movieJudgments, search_index=INDEX_NAME)

build_features_judgments_file(movieJudgments, filename=JUDGMENTS_FILE_FEATURES)生成完的特征集如下:

4 qid:1 1:12.318446 2:10.573845 # 7555 rambo

3 qid:1 1:10.357836 2:11.950331 # 1370 rambo

3 qid:1 1:7.0104666 2:11.220029 # 1369 rambo

3 qid:1 1:0.0 2:11.220029 # 1368 rambo

0 qid:1 1:0.0 2:0.0 # 136278 rambo

4 qid:2 1:10.686367 2:8.814796 # 1366 rocky

3 qid:2 1:8.985519 2:9.984467 # 1246 rocky

3 qid:2 1:8.985519 2:8.067647 # 60375 rocky

3 qid:2 1:8.985519 2:5.6604943 # 1371 rocky

3 qid:2 1:8.985519 2:7.3007236 # 1375 rocky

特征集出来后就是训练了,demo提供10总不同的算法,训练好之后把结果传到es提供服务

for modelType in [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]:

# 0, MART

# 1, RankNet

# 2, RankBoost

# 3, AdaRank

# 4, coord Ascent

# 6, LambdaMART

# 7, ListNET

# 8, Random Forests

# 9, Linear Regression

Logger.logger.info("*** Training %s " % modelType)

train_model(judgments_with_features_file=JUDGMENTS_FILE_FEATURES, model_output='model.txt',

which_model=modelType)

save_model(script_name="test_%s" % modelType, feature_set=FEATURE_SET_NAME, model_fname='model.txt')python search.py Rambo

搜索时主要用到了es里面的rescore特性,就是对前面topn条记录根据模型进行再排序,查询dsl如下:

{

"query": {

"multi_match": {

"query": "Rambo",

"fields": ["title", "overview"]

}

},

"rescore": {

"query": {

"rescore_query": {

"sltr": {

"params": {

"keywords": "Rambo"

},

"model": "test_1",

}

}

}

}

}得到结果

Rambo

Rambo III

Rambo: First Blood Part II

First Blood

In the Line of Duty: The F.B.I. Murders

Son of Rambow

Spud

当然这个是最简单的一个例子,深入研究可以参考官方文档,很详细:https://elasticsearch-learning ... test/

demo的下载地址如下,都是python脚本,环境需求:python3+,es

https://github.com/o19s/elasti ... /demo

1.准备数据

python prepare.py

下载RankLib.jar (用来训练模型) 和tmdb.json (测试数据集,tmdb的电影数据)

2.导测试数据入es

python index_ml_tmdb.py

3.训练模型

python train.py

训练脚本很简单,但是脚本里面有丰富的实现,下面介绍下主要方法。

load_features(FEATURE_SET_NAME)

这个是读取特征信息,demo定义了两个特征,分别在1.json

{

"query": {

"match": {

"title": "{{keywords}}"

}

}

}{

"query": {

"match": {

"overview": "{{keywords}}"

}

}

}movieJudgments = judgments_by_qid(judgments_from_file(filename=JUDGMENTS_FILE))4 qid:1 # 7555 Rambo

3 qid:1 # 1370 Rambo III

3 qid:1 # 1369 Rambo: First Blood Part II

3 qid:1 # 1368 First Blood

0 qid:1 # 136278 Blood

4 qid:2 # 1366 Rocky

3 qid:2 # 1246 Rocky Balboa

3 qid:2 # 60375 Rocky VI

3 qid:2 # 1371 Rocky III

3 qid:2 # 1375 Rocky V

log_features(es, judgments_dict=movieJudgments, search_index=INDEX_NAME)

build_features_judgments_file(movieJudgments, filename=JUDGMENTS_FILE_FEATURES)生成完的特征集如下:

4 qid:1 1:12.318446 2:10.573845 # 7555 rambo

3 qid:1 1:10.357836 2:11.950331 # 1370 rambo

3 qid:1 1:7.0104666 2:11.220029 # 1369 rambo

3 qid:1 1:0.0 2:11.220029 # 1368 rambo

0 qid:1 1:0.0 2:0.0 # 136278 rambo

4 qid:2 1:10.686367 2:8.814796 # 1366 rocky

3 qid:2 1:8.985519 2:9.984467 # 1246 rocky

3 qid:2 1:8.985519 2:8.067647 # 60375 rocky

3 qid:2 1:8.985519 2:5.6604943 # 1371 rocky

3 qid:2 1:8.985519 2:7.3007236 # 1375 rocky

特征集出来后就是训练了,demo提供10总不同的算法,训练好之后把结果传到es提供服务

for modelType in [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]:

# 0, MART

# 1, RankNet

# 2, RankBoost

# 3, AdaRank

# 4, coord Ascent

# 6, LambdaMART

# 7, ListNET

# 8, Random Forests

# 9, Linear Regression

Logger.logger.info("*** Training %s " % modelType)

train_model(judgments_with_features_file=JUDGMENTS_FILE_FEATURES, model_output='model.txt',

which_model=modelType)

save_model(script_name="test_%s" % modelType, feature_set=FEATURE_SET_NAME, model_fname='model.txt')python search.py Rambo

搜索时主要用到了es里面的rescore特性,就是对前面topn条记录根据模型进行再排序,查询dsl如下:

{

"query": {

"multi_match": {

"query": "Rambo",

"fields": ["title", "overview"]

}

},

"rescore": {

"query": {

"rescore_query": {

"sltr": {

"params": {

"keywords": "Rambo"

},

"model": "test_1",

}

}

}

}

}得到结果

Rambo

Rambo III

Rambo: First Blood Part II

First Blood

In the Line of Duty: The F.B.I. Murders

Son of Rambow

Spud

当然这个是最简单的一个例子,深入研究可以参考官方文档,很详细:https://elasticsearch-learning ... test/

收起阅读 »

社区日报 第482期 (2018-12-18)

http://t.cn/EUFd8Yd

2、ElasticsearchSQL用法详解。

http://t.cn/EUFdu9z

3、在滴滴云DC2云服务器上搭建ELK。

http://t.cn/EUFdrKb

编辑:叮咚光军

归档:https://elasticsearch.cn/article/6207

订阅:https://tinyletter.com/elastic-daily

http://t.cn/EUFd8Yd

2、ElasticsearchSQL用法详解。

http://t.cn/EUFdu9z

3、在滴滴云DC2云服务器上搭建ELK。

http://t.cn/EUFdrKb

编辑:叮咚光军

归档:https://elasticsearch.cn/article/6207

订阅:https://tinyletter.com/elastic-daily 收起阅读 »

Day 18: 记filebeat内存泄漏问题分析及调优

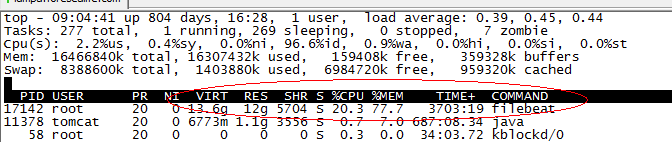

ELK 从发布5.0之后加入了beats套件之后,就改名叫做elastic stack了。beats是一组轻量级的软件,给我们提供了简便,快捷的方式来实时收集、丰富更多的数据用以支撑我们的分析。但由于beats都需要安装在ELK集群之外,在宿主机之上,其对宿主机的性能的影响往往成为了考量其是否能被使用的关键,而不是它到底提供了什么样的功能。因为业务的稳定运行才是核心KPI,而其他因运维而生的数据永远是更低的优先级。影响宿主机性能的方面可能有很多,比如CPU占用率,网络吞吐占用率,磁盘IO,内存等,这里我们详细讨论一下内存泄漏的问题

@[toc]

filebeat是beats套件的核心组件之一(另一个核心是metricbeat),用于采集文件内容并发送到收集端(ES),它一般安装在宿主机上,即生成文件的机器。根据文档的描述,filebeat是不建议用来采集NFS(网络共享磁盘)上的数据的,因此,我们这里只讨论filebeat对本地文件进行采集时的性能情况。

当filebeat部署和运行之后,必定会对cpu,内存,网络等资源产生一定的消耗,当这种消耗能够限定在一个可接受的范围时,在企业内部的生产服务器上大规模部署filebeat是可行的。但如果出现一些非预期的情况,比如占用了大量的内存,那么运维团队肯定是优先保障核心业务的资源,把filebeat进程给杀了。很可惜的是,内存泄漏的问题,从filebeat的诞生到现在就一直没有完全解决过。(可以区社区讨论贴看看,直到现在V6.5.1都还有人在报告内存泄漏的问题)。在特定的场景和配置下,内存占用过多已经成为了抑止filebeat大规模部署的主要问题了。在这里,我主要描述一下我碰到的在filebeat 6.0上遇到的问题。

问题场景和配置

一开始我们在很多机器上部署了filebeat,并且使用了一套统一无差别的的简单配置。对于想要在企业内部大规模推广filebeat的同学来说,这是大忌!!! 合理的方式是具体问题具体分析,需对每台机器上产生文件的方式和rotate的方式进行充分的调研,针对不同的场景是做定制化的配置。以下是我们之前使用的配置:

multiline,多行的配置,当日志文件不符合规范,大量的匹配pattern的时候,会造成内存泄漏max_procs,限制filebeat的进程数量,其实是内核数,建议手动设为1

filebeat.prospectors:

- type: log

enabled: true

paths:

- /qhapp/*/*.log

tail_files: true

multiline.pattern: '^[[:space:]]+|^Caused by:|^.+Exception:|^\d+\serror'

multiline.negate: false

multiline.match: after

fields:

app_id: bi_lass

service: "{{ hostvars[inventory_hostname]['service'] }}"

ip_address: "{{ hostvars[inventory_hostname]['ansible_host'] }}"

topic: qh_app_raw_log

filebeat.config.modules:

path: ${path.config}/modules.d/*.yml

reload.enabled: false

setup.template.settings:

index.number_of_shards: 3

#index.codec: best_compression

#_source.enabled: false

output.kafka:

enabled: true

hosts: [{{kafka_url}}]

topic: '%{[fields][topic]}'

max_procs: 1

注意,以上的配置中,仅仅对cpu的内核数进行了限制,而没有对内存的使用率进行特殊的限制。从配置层面来说,影响filebeat内存使用情况的指标主要有两个:

queue.mem.events消息队列的大小,默认值是4096,这个参数在6.0以前的版本是spool-size,通过命令行,在启动时进行配置max_message_bytes单条消息的大小, 默认值是10M

filebeat最大的可能占用的内存是max_message_bytes * queue.mem.events = 40G,考虑到这个queue是用于存储encode过的数据,raw数据也是要存储的,所以,在没有对内存进行限制的情况下,最大的内存占用情况是可以达到超过80G。

因此,建议是同时对filebeat的CPU和内存进行限制。

下面,我们看看,使用以上的配置在什么情况下会观测到内存泄漏

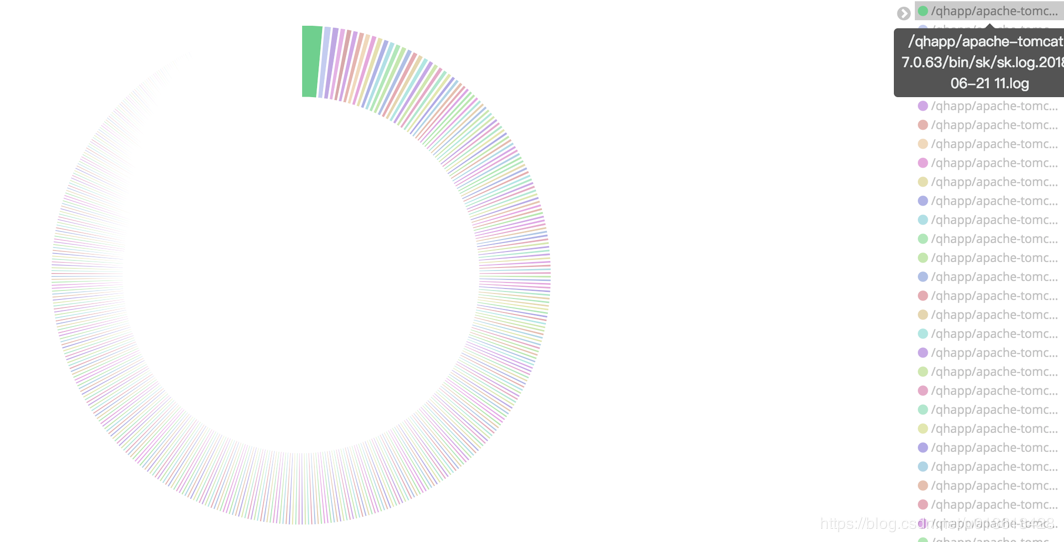

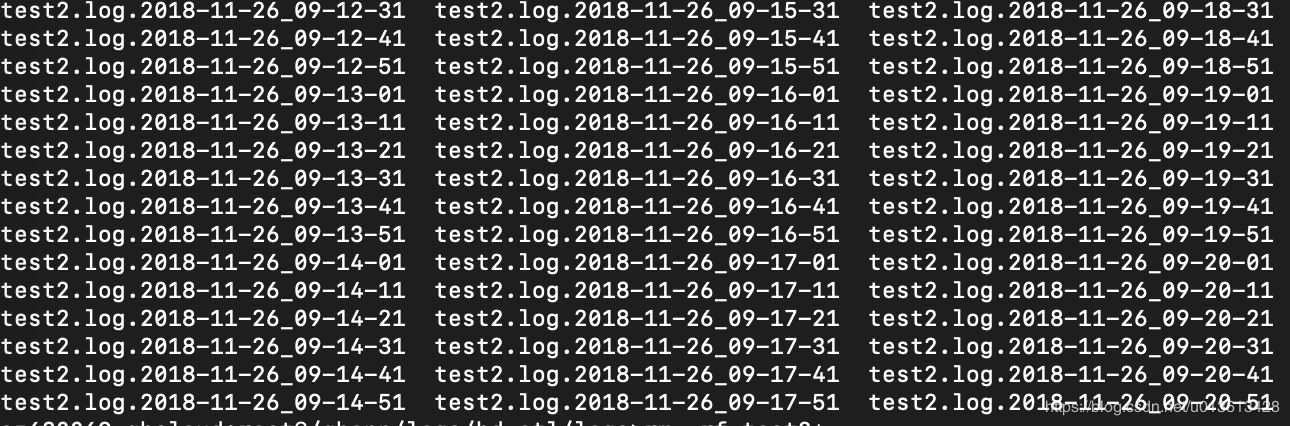

监控文件过多

对于实时大量产生内容的文件,比如日志,常用的做法往往是将日志文件进行rotate,根据策略的不同,每隔一段时间或者达到固定大小之后,将日志rotate。 这样,在文件目录下可能会产生大量的日志文件。 如果我们使用通配符的方式,去监控该目录,则filebeat会启动大量的harvester实例去采集文件。但是,请记住,我这里不是说这样一定会产生内存泄漏,只是在这里观测到了内存泄漏而已,不是说这是造成内存泄漏的原因。

当filebeat运行了几个月之后,占用了超过10个G的内存

非常频繁的rotate日志

另一个可能是,filebeat只配置监控了一个文件,比如test2.log,但由于test2.log不停的rotate出新的文件,虽然没有使用通配符采集该目录下的所有文件,但因为linux系统是使用inode number来唯一标示文件的,rotate出来的新文件并没有改变其inode number,因此,时间上filebeat还是同时开启了对多个文件的监控。

另外,因为对文件进行rotate的时候,一般会限制rotate的个数,即到达一定数量时,新rotate一个文件,必然会删除一个旧的文件,文件删除之后,inode number是可以复用的,如果不巧,新rotate出来的文件被分配了一个之前已删掉文件的inode number,而此时filebeat还没有监测之前持有该inode number的文件已删除,则会抛出以下异常:

2018-11-21T18:06:55+08:00 ERR Harvester could not be started on truncated file: /qhapp/logs/bd-etl/logs/test2.log, Err: Error setting up harvester: Harvester setup failed. Unexpected file opening error: file info is not identical with opened file. Aborting harvesting and retrying file later again而类似Harvester setup failed.的异常会导致内存泄漏

https://github.com/elastic/beats/issues/6797

因为multiline导致内存占用过多

multiline.pattern: '^[[:space:]]+|^Caused by:|^.+Exception:|^\d+\serror,比如这个配置,认为空格或者制表符开头的line是上一行的附加内容,需要作为多行模式,存储到同一个event当中。当你监控的文件刚巧在文件的每一行带有一个空格时,会错误的匹配多行,造成filebeat解析过后,单条event的行数达到了上千行,大小达到了10M,并且在这过程中使用的是正则表达式,每一条event的处理都会极大的消耗内存。因为大多数的filebeat output是需应答的,buffer这些event必然会大量的消耗内存。

模拟场景

这里不多说,简单来一段python的代码:

[loggers]

keys=root

[handlers]

keys=NormalHandler

[formatters]

keys=formatter

[logger_root]

level=DEBUG

handlers=NormalHandler

[handler_NormalHandler]

class=logging.handlers.TimedRotatingFileHandler

formatter=formatter

args=('./test2.log', 'S', 10, 200)

[formatter_formatter]

format=%(asctime)s %(filename)s[line:%(lineno)d] %(levelname)s %(message)s以上,每隔10秒('S', 'M' = 分钟,'D'= 天)rotate一个文件,一共可以rotate 200个文件。 然后,随便找一段日志,不停的打,以下是330条/秒

import logging

from logging.config import fileConfig

import os

import time

CURRENT_FOLDER = os.path.dirname(os.path.realpath(__file__))

fileConfig(CURRENT_FOLDER + '/logging.ini')

logger = logging.getLogger()

while True:

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - 资源请求:date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [数据库操作异常处理JOB]处理异常文件,本机不运行,退出任务!")

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - 资源请求:date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [数据库操作异常处理JOB]处理异常文件,本机不运行,退出任务!")

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - 资源请求:date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [数据库操作异常处理JOB]处理异常文件,本机不运行,退出任务!")

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - 资源请求:date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [数据库操作异常处理JOB]处理异常文件,本机不运行,退出任务!")

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - 资源请求:date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [数据库操作异常处理JOB]处理异常文件,本机不运行,退出任务!")

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - 资源请求:date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [数据库操作异常处理JOB]处理异常文件,本机不运行,退出任务!")

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - 资源请求:date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [数据库操作异常处理JOB]处理异常文件,本机不运行,退出任务!")

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - 资源请求:date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [数据库操作异常处理JOB]处理异常文件,本机不运行,退出任务!")

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - 资源请求:date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [数据库操作异常处理JOB]处理异常文件,本机不运行,退出任务!")

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - 资源请求:date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [数据库操作异常处理JOB]处理异常文件,本机不运行,退出任务!!@#!@#!@#!@#!@#!@#!@#!@#!@#!@#!@#!#@!!!@##########################################################################################################################################################")

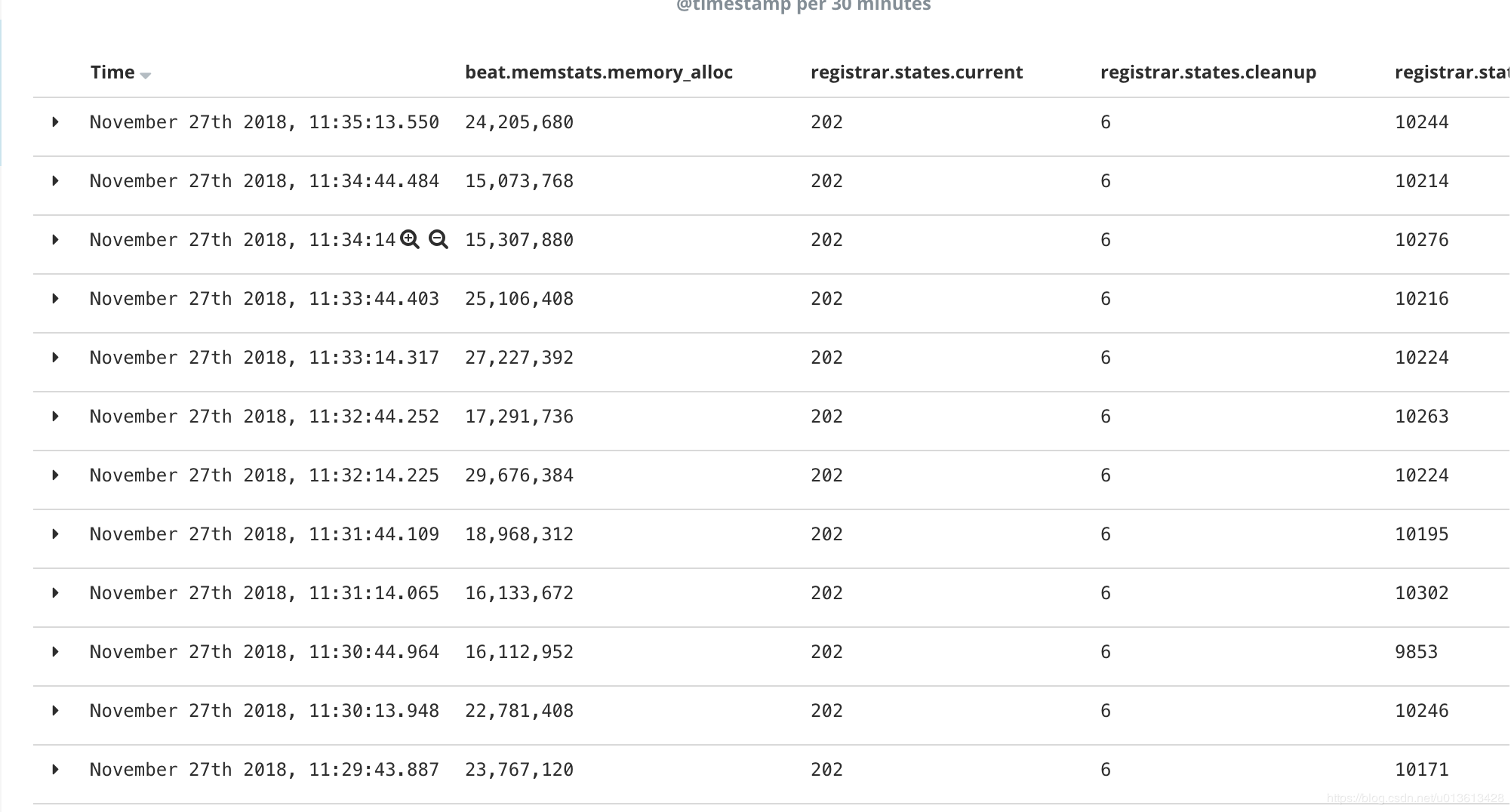

time.sleep(0.03)如何观察filebeat的内存

在6.3版本之前,我们是无法通过xpack的monitoring功能来观察beats套件的性能的。因此,这里讨论的是没有monitoring时,我们如何去检测filebeat的性能。当然,简单的方法是通过top,ps等操作系统的命令进行查看,但这些都是实时的,无法做趋势的观察,并且都是进程级别的,无法看到filebeat内部的真是情况。因此,这里介绍如何通过filebeat的日志和pprof这个工具来观察内存的使用情况

通过filebeat的日志

filebeat文件解读

其实filebeat的日志,已经包含了很多参数用于实时观测filebeat的资源使用情况,以下是filebeat的一个日志片段(这里的日志片段是6.0版本的,6.3版本之后,整个日志格式变了,从kv格式变成了json对象格式,xpack可以直接通过日志进行filebeat的monitoring):

2018-11-02T17:40:01+08:00 INFO Non-zero metrics in the last 30s: beat.memstats.gc_next=623475680 beat.memstats.memory_alloc=391032232 beat.memstats.memory_total=155885103371024 filebeat.events.active=-402 filebeat.events.added=13279 filebeat.events.done=13681 filebeat.harvester.closed=1 filebeat.harvester.open_files=7 filebeat.harvester.running=7 filebeat.harvester.started=2 libbeat.config.module.running=0 libbeat.output.events.acked=13677 libbeat.output.events.batches=28 libbeat.output.events.total=13677 libbeat.outputs.kafka.bytes_read=12112 libbeat.outputs.kafka.bytes_write=1043381 libbeat.pipeline.clients=1 libbeat.pipeline.events.active=0 libbeat.pipeline.events.filtered=4 libbeat.pipeline.events.published=13275 libbeat.pipeline.events.total=13279 libbeat.pipeline.queue.acked=13677 registrar.states.cleanup=1 registrar.states.current=8 registrar.states.update=13681 registrar.writes=28

里面的参数主要分成三个部分:

beat.*,包含memstats.gc_next,memstats.memory_alloc,memstats.memory_total,这个是所有beat组件都有的指标,是filebeat继承来的,主要是内存相关的,我们这里特别关注memstats.memory_alloc,alloc的越多,占用内存越大filebeat.*,这部分是filebeat特有的指标,通过event相关的指标,我们知道吞吐,通过harvester,我们知道正在监控多少个文件,未消费event堆积的越多,havester创建的越多,消耗内存越大libbeat.*,也是beats组件通用的指标,包含outputs和pipeline等信息。这里要主要当outputs发生阻塞的时候,会直接影响queue里面event的消费,造成内存堆积registrar,filebeat将监控文件的状态放在registry文件里面,当监控文件非常多的时候,比如10万个,而且没有合理的设置close_inactive参数,这个文件能达到100M,载入内存后,直接占用内存

filebeat日志解析

当然,我们不可能直接去读这个日志,既然我们使用ELK,肯定是用ELK进行解读。因为是kv格式,很方便,用logstash的kv plugin:

filter {

kv {}

}kv无法指定properties的type,所以,我们需要稍微指定了一下索引的模版:

PUT _template/template_1

{

"index_patterns": ["filebeat*"],

"settings": {

"number_of_shards": 1

},

"mappings": {

"doc": {

"_source": {

"enabled": false

},

"dynamic_templates": [

{

"longs_as_strings": {

"match_mapping_type": "string",

"path_match": "*beat.*",

"path_unmatch": "*.*name",

"mapping": {

"type": "long"

}

}

}

]

}

}

}上面的模版,将kv解析出的properties都mapping到long类型,但注意"path_match": "*beat.*"无法match到registrar的指标,读者可以自己写一个更完善的mapping。

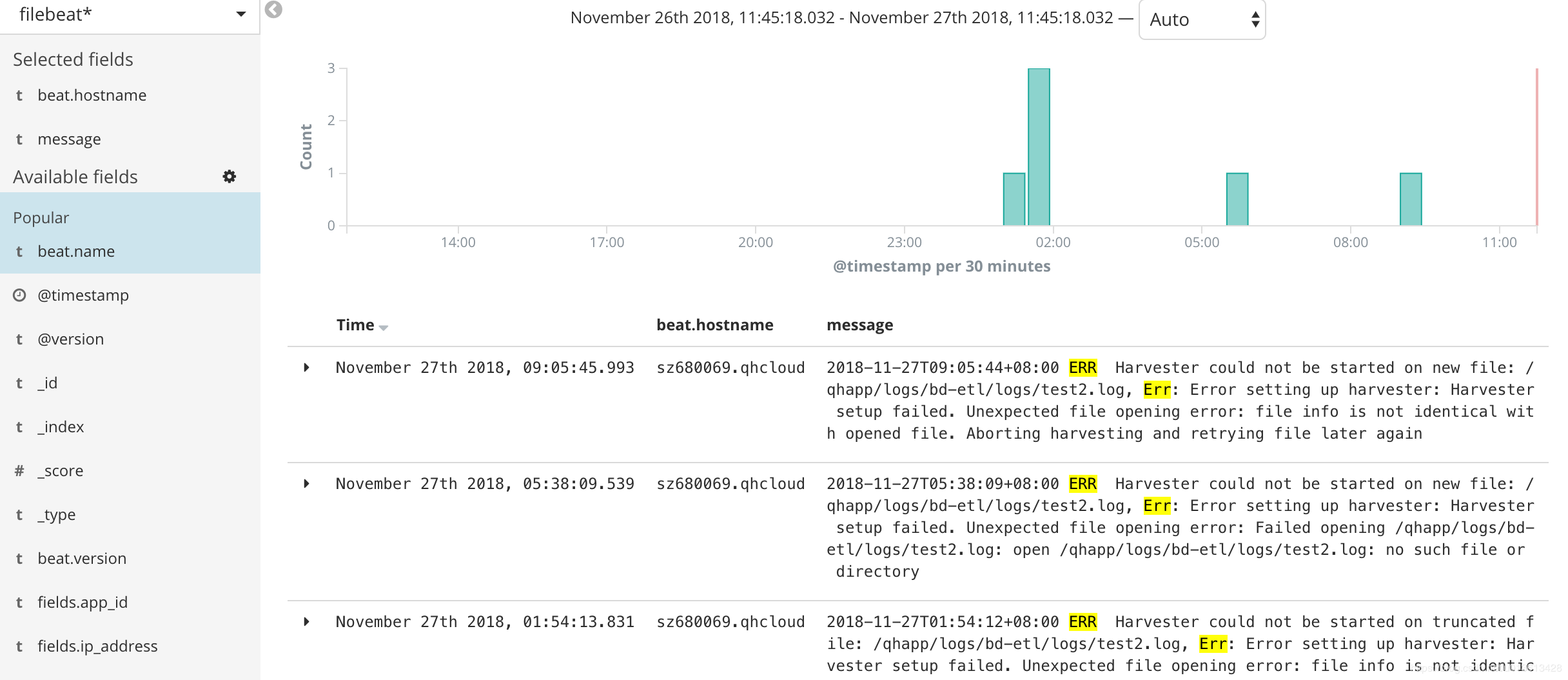

这样,我们就可以通过kibana可视化组件,清楚的看到内存泄漏的过程

以及资源的使用情况:

将信息可视化之后,我们可以明显的发现,内存的突变和ERR是同时发生的

即以下error:

2018-11-27T09:05:44+08:00 ERR Harvester could not be started on new file: /qhapp/logs/bd-etl/logs/test2.log, Err: Error setting up harvester: Harvester setup failed. Unexpected file opening error: file info is not identical with opened file. Aborting harvesting and retrying file later again

会导致filebeat突然申请了额外的内存。具体请查看issue

通过pprof

众所周知,filebeat是用go语言实现的,而go语言本身的基础库里面就包含pprof这个功能极其强大的性能分析工具,只是这个工具是用于debug的,在正常模式下,filebeat是不会启动这个选贤的,并且很遗憾,在官方文档里面根本没有提及我们可以使用pprof来观测filebeat。我们接下来可以通过6.3上修复的一个内存泄漏的issue,来学习怎么使用pprof进行分析

启动pprof监测

首先,需要让filebeat在启动的时候运行pprof,具体的做法是在启动是加上参数-httpprof localhost:6060,即/usr/share/filebeat/bin/filebeat -c /etc/filebeat/filebeat.yml -path.home /usr/share/filebeat -path.config /etc/filebeat -path.data /var/lib/filebeat -path.logs /var/log/filebeat -httpprof localhost:6060。这里只绑定了localhost,无法通过远程访问,如果想远程访问,应该使用0.0.0.0。

这时,你就可以通过curl http://localhost:6060/debug/pprof/heap > profile.txt等命令,获取filebeat的实时堆栈信息了。

远程连接

当然,你也可以通过在你的本地电脑上安装go,然后通过go tool远程连接pprof。

因为我们是需要研究内存的问题,所以以下连接访问的是/heap子路径

go tool pprof http://10.60.x.x:6060/debug/pprof/heap

top 命令

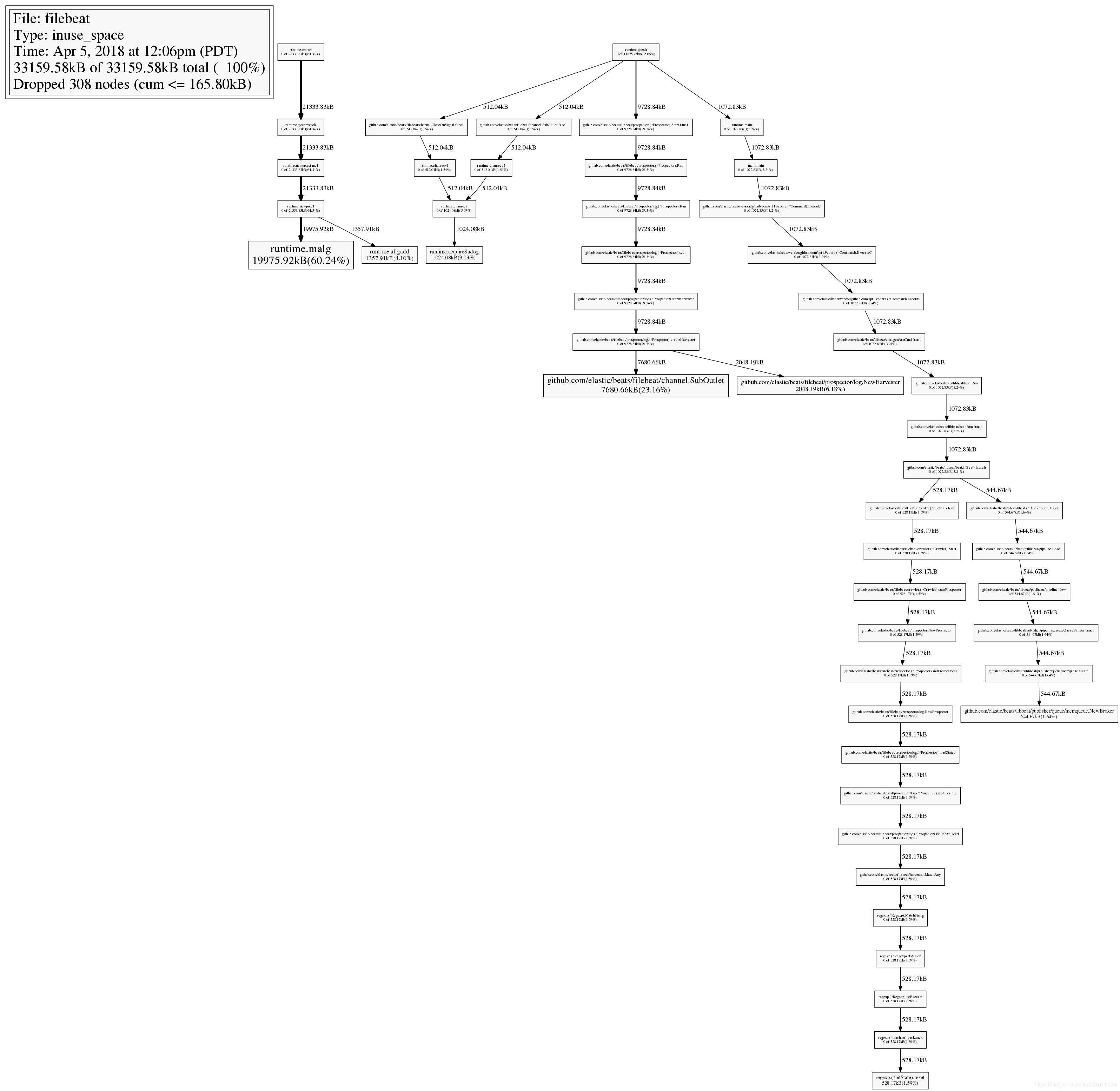

连接之后,你可以通过top命令,查看消耗内存最多的几个实例:

33159.58kB of 33159.58kB total ( 100%)

Dropped 308 nodes (cum <= 165.80kB)

Showing top 10 nodes out of 51 (cum >= 512.04kB)

flat flat% sum% cum cum%

19975.92kB 60.24% 60.24% 19975.92kB 60.24% runtime.malg

7680.66kB 23.16% 83.40% 7680.66kB 23.16% github.com/elastic/beats/filebeat/channel.SubOutlet

2048.19kB 6.18% 89.58% 2048.19kB 6.18% github.com/elastic/beats/filebeat/prospector/log.NewHarvester

1357.91kB 4.10% 93.68% 1357.91kB 4.10% runtime.allgadd

1024.08kB 3.09% 96.76% 1024.08kB 3.09% runtime.acquireSudog

544.67kB 1.64% 98.41% 544.67kB 1.64% github.com/elastic/beats/libbeat/publisher/queue/memqueue.NewBroker

528.17kB 1.59% 100% 528.17kB 1.59% regexp.(*bitState).reset

0 0% 100% 528.17kB 1.59% github.com/elastic/beats/filebeat/beater.(*Filebeat).Run

0 0% 100% 512.04kB 1.54% github.com/elastic/beats/filebeat/channel.CloseOnSignal.func1

0 0% 100% 512.04kB 1.54% github.com/elastic/beats/filebeat/channel.SubOutlet.func1查看堆栈调用图

输入web命令,会生产堆栈调用关系的svg图,在这个svg图中,你可以结合top命令一起查看,在top中,我们已经知道github.com/elastic/beats/filebeat/channel.SubOutlet占用了很多的内存,在图中,展现的是调用关系栈,你可以看到这个类是怎么被实例化的,并且在整个堆中,内存是怎么分布的。最直观的是,实例所处的长方形面积越大,代表占用的内存越多。:

查看源码

通过list命令,可以迅速查看可以实例的问题源码,比如在之前的top10命令中,我们已经看到github.com/elastic/beats/filebeat/channel.SubOutlet这个类的实例占用了大量的内存,我们可以通过list做进一步的分析,看看这个类内部在哪个语句开始出现内存的占用:

(pprof) list SubOutlet

Total: 32.38MB

ROUTINE ======================== github.com/elastic/beats/filebeat/channel.SubOutlet in /home/jeremy/src/go/src/github.com/elastic/beats/filebeat/channel/util.go

7.50MB 7.50MB (flat, cum) 23.16% of Total

. . 15:// SubOutlet create a sub-outlet, which can be closed individually, without closing the

. . 16:// underlying outlet.

. . 17:func SubOutlet(out Outleter) Outleter {

. . 18: s := &subOutlet{

. . 19: isOpen: atomic.MakeBool(true),

1MB 1MB 20: done: make(chan struct{}),

2MB 2MB 21: ch: make(chan *util.Data),

4.50MB 4.50MB 22: res: make(chan bool, 1),

. . 23: }

. . 24:

. . 25: go func() {

. . 26: for event := range s.ch {

. . 27: s.res <- out.OnEvent(event) 如何调优

其实调优的过程就是调整参数的过程,之前说过了,和内存相关的参数, max_message_bytes,queue.mem.events,queue.mem.flush.min_events,以及队列占用内存的公式:max_message_bytes * queue.mem.events

output.kafka:

enabled: true

# max_message_bytes: 1000000

hosts: ["10.60.x.x:9092"]

topic: '%{[fields][topic]}'

max_procs: 1

#queue.mem.events: 256

#queue.mem.flush.min_events: 128但其实,不同的环境下,不同的原因都可能会造成filebeat占用的内存过大,此时,需要仔细的确认你的上下文环境:

- 是否因为通配符的原因,造成同时监控数量巨大的文件,这种情况应该避免用通配符监控无用的文件。

- 是否文件的单行内容巨大,确定是否需要改造文件内容,或者将其过滤

- 是否过多的匹配了multiline的pattern,并且多行的event是否单条体积过大。这时,就需要暂时关闭multiline,修改文件内容或者multiline的pattern。

- 是否output经常阻塞,event queue里面总是一直缓存event。这时要检查你的网络环境或者消息队列等中间件是否正常

ELK 从发布5.0之后加入了beats套件之后,就改名叫做elastic stack了。beats是一组轻量级的软件,给我们提供了简便,快捷的方式来实时收集、丰富更多的数据用以支撑我们的分析。但由于beats都需要安装在ELK集群之外,在宿主机之上,其对宿主机的性能的影响往往成为了考量其是否能被使用的关键,而不是它到底提供了什么样的功能。因为业务的稳定运行才是核心KPI,而其他因运维而生的数据永远是更低的优先级。影响宿主机性能的方面可能有很多,比如CPU占用率,网络吞吐占用率,磁盘IO,内存等,这里我们详细讨论一下内存泄漏的问题

@[toc]

filebeat是beats套件的核心组件之一(另一个核心是metricbeat),用于采集文件内容并发送到收集端(ES),它一般安装在宿主机上,即生成文件的机器。根据文档的描述,filebeat是不建议用来采集NFS(网络共享磁盘)上的数据的,因此,我们这里只讨论filebeat对本地文件进行采集时的性能情况。

当filebeat部署和运行之后,必定会对cpu,内存,网络等资源产生一定的消耗,当这种消耗能够限定在一个可接受的范围时,在企业内部的生产服务器上大规模部署filebeat是可行的。但如果出现一些非预期的情况,比如占用了大量的内存,那么运维团队肯定是优先保障核心业务的资源,把filebeat进程给杀了。很可惜的是,内存泄漏的问题,从filebeat的诞生到现在就一直没有完全解决过。(可以区社区讨论贴看看,直到现在V6.5.1都还有人在报告内存泄漏的问题)。在特定的场景和配置下,内存占用过多已经成为了抑止filebeat大规模部署的主要问题了。在这里,我主要描述一下我碰到的在filebeat 6.0上遇到的问题。

问题场景和配置

一开始我们在很多机器上部署了filebeat,并且使用了一套统一无差别的的简单配置。对于想要在企业内部大规模推广filebeat的同学来说,这是大忌!!! 合理的方式是具体问题具体分析,需对每台机器上产生文件的方式和rotate的方式进行充分的调研,针对不同的场景是做定制化的配置。以下是我们之前使用的配置:

multiline,多行的配置,当日志文件不符合规范,大量的匹配pattern的时候,会造成内存泄漏max_procs,限制filebeat的进程数量,其实是内核数,建议手动设为1

filebeat.prospectors:

- type: log

enabled: true

paths:

- /qhapp/*/*.log

tail_files: true

multiline.pattern: '^[[:space:]]+|^Caused by:|^.+Exception:|^\d+\serror'

multiline.negate: false

multiline.match: after

fields:

app_id: bi_lass

service: "{{ hostvars[inventory_hostname]['service'] }}"

ip_address: "{{ hostvars[inventory_hostname]['ansible_host'] }}"

topic: qh_app_raw_log

filebeat.config.modules:

path: ${path.config}/modules.d/*.yml

reload.enabled: false

setup.template.settings:

index.number_of_shards: 3

#index.codec: best_compression

#_source.enabled: false

output.kafka:

enabled: true

hosts: [{{kafka_url}}]

topic: '%{[fields][topic]}'

max_procs: 1

注意,以上的配置中,仅仅对cpu的内核数进行了限制,而没有对内存的使用率进行特殊的限制。从配置层面来说,影响filebeat内存使用情况的指标主要有两个:

queue.mem.events消息队列的大小,默认值是4096,这个参数在6.0以前的版本是spool-size,通过命令行,在启动时进行配置max_message_bytes单条消息的大小, 默认值是10M

filebeat最大的可能占用的内存是max_message_bytes * queue.mem.events = 40G,考虑到这个queue是用于存储encode过的数据,raw数据也是要存储的,所以,在没有对内存进行限制的情况下,最大的内存占用情况是可以达到超过80G。

因此,建议是同时对filebeat的CPU和内存进行限制。

下面,我们看看,使用以上的配置在什么情况下会观测到内存泄漏

监控文件过多

对于实时大量产生内容的文件,比如日志,常用的做法往往是将日志文件进行rotate,根据策略的不同,每隔一段时间或者达到固定大小之后,将日志rotate。 这样,在文件目录下可能会产生大量的日志文件。 如果我们使用通配符的方式,去监控该目录,则filebeat会启动大量的harvester实例去采集文件。但是,请记住,我这里不是说这样一定会产生内存泄漏,只是在这里观测到了内存泄漏而已,不是说这是造成内存泄漏的原因。

当filebeat运行了几个月之后,占用了超过10个G的内存

非常频繁的rotate日志

另一个可能是,filebeat只配置监控了一个文件,比如test2.log,但由于test2.log不停的rotate出新的文件,虽然没有使用通配符采集该目录下的所有文件,但因为linux系统是使用inode number来唯一标示文件的,rotate出来的新文件并没有改变其inode number,因此,时间上filebeat还是同时开启了对多个文件的监控。

另外,因为对文件进行rotate的时候,一般会限制rotate的个数,即到达一定数量时,新rotate一个文件,必然会删除一个旧的文件,文件删除之后,inode number是可以复用的,如果不巧,新rotate出来的文件被分配了一个之前已删掉文件的inode number,而此时filebeat还没有监测之前持有该inode number的文件已删除,则会抛出以下异常:

2018-11-21T18:06:55+08:00 ERR Harvester could not be started on truncated file: /qhapp/logs/bd-etl/logs/test2.log, Err: Error setting up harvester: Harvester setup failed. Unexpected file opening error: file info is not identical with opened file. Aborting harvesting and retrying file later again而类似Harvester setup failed.的异常会导致内存泄漏

https://github.com/elastic/beats/issues/6797

因为multiline导致内存占用过多

multiline.pattern: '^[[:space:]]+|^Caused by:|^.+Exception:|^\d+\serror,比如这个配置,认为空格或者制表符开头的line是上一行的附加内容,需要作为多行模式,存储到同一个event当中。当你监控的文件刚巧在文件的每一行带有一个空格时,会错误的匹配多行,造成filebeat解析过后,单条event的行数达到了上千行,大小达到了10M,并且在这过程中使用的是正则表达式,每一条event的处理都会极大的消耗内存。因为大多数的filebeat output是需应答的,buffer这些event必然会大量的消耗内存。

模拟场景

这里不多说,简单来一段python的代码:

[loggers]

keys=root

[handlers]

keys=NormalHandler

[formatters]

keys=formatter

[logger_root]

level=DEBUG

handlers=NormalHandler

[handler_NormalHandler]

class=logging.handlers.TimedRotatingFileHandler

formatter=formatter

args=('./test2.log', 'S', 10, 200)

[formatter_formatter]

format=%(asctime)s %(filename)s[line:%(lineno)d] %(levelname)s %(message)s以上,每隔10秒('S', 'M' = 分钟,'D'= 天)rotate一个文件,一共可以rotate 200个文件。 然后,随便找一段日志,不停的打,以下是330条/秒

import logging

from logging.config import fileConfig

import os

import time

CURRENT_FOLDER = os.path.dirname(os.path.realpath(__file__))

fileConfig(CURRENT_FOLDER + '/logging.ini')

logger = logging.getLogger()

while True:

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - 资源请求:date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [数据库操作异常处理JOB]处理异常文件,本机不运行,退出任务!")

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - 资源请求:date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [数据库操作异常处理JOB]处理异常文件,本机不运行,退出任务!")

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - 资源请求:date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [数据库操作异常处理JOB]处理异常文件,本机不运行,退出任务!")

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - 资源请求:date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [数据库操作异常处理JOB]处理异常文件,本机不运行,退出任务!")

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - 资源请求:date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [数据库操作异常处理JOB]处理异常文件,本机不运行,退出任务!")

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - 资源请求:date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [数据库操作异常处理JOB]处理异常文件,本机不运行,退出任务!")

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - 资源请求:date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [数据库操作异常处理JOB]处理异常文件,本机不运行,退出任务!")

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - 资源请求:date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [数据库操作异常处理JOB]处理异常文件,本机不运行,退出任务!")

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - 资源请求:date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [数据库操作异常处理JOB]处理异常文件,本机不运行,退出任务!")

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - 资源请求:date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [数据库操作异常处理JOB]处理异常文件,本机不运行,退出任务!!@#!@#!@#!@#!@#!@#!@#!@#!@#!@#!@#!#@!!!@##########################################################################################################################################################")

time.sleep(0.03)如何观察filebeat的内存

在6.3版本之前,我们是无法通过xpack的monitoring功能来观察beats套件的性能的。因此,这里讨论的是没有monitoring时,我们如何去检测filebeat的性能。当然,简单的方法是通过top,ps等操作系统的命令进行查看,但这些都是实时的,无法做趋势的观察,并且都是进程级别的,无法看到filebeat内部的真是情况。因此,这里介绍如何通过filebeat的日志和pprof这个工具来观察内存的使用情况

通过filebeat的日志

filebeat文件解读

其实filebeat的日志,已经包含了很多参数用于实时观测filebeat的资源使用情况,以下是filebeat的一个日志片段(这里的日志片段是6.0版本的,6.3版本之后,整个日志格式变了,从kv格式变成了json对象格式,xpack可以直接通过日志进行filebeat的monitoring):

2018-11-02T17:40:01+08:00 INFO Non-zero metrics in the last 30s: beat.memstats.gc_next=623475680 beat.memstats.memory_alloc=391032232 beat.memstats.memory_total=155885103371024 filebeat.events.active=-402 filebeat.events.added=13279 filebeat.events.done=13681 filebeat.harvester.closed=1 filebeat.harvester.open_files=7 filebeat.harvester.running=7 filebeat.harvester.started=2 libbeat.config.module.running=0 libbeat.output.events.acked=13677 libbeat.output.events.batches=28 libbeat.output.events.total=13677 libbeat.outputs.kafka.bytes_read=12112 libbeat.outputs.kafka.bytes_write=1043381 libbeat.pipeline.clients=1 libbeat.pipeline.events.active=0 libbeat.pipeline.events.filtered=4 libbeat.pipeline.events.published=13275 libbeat.pipeline.events.total=13279 libbeat.pipeline.queue.acked=13677 registrar.states.cleanup=1 registrar.states.current=8 registrar.states.update=13681 registrar.writes=28

里面的参数主要分成三个部分:

beat.*,包含memstats.gc_next,memstats.memory_alloc,memstats.memory_total,这个是所有beat组件都有的指标,是filebeat继承来的,主要是内存相关的,我们这里特别关注memstats.memory_alloc,alloc的越多,占用内存越大filebeat.*,这部分是filebeat特有的指标,通过event相关的指标,我们知道吞吐,通过harvester,我们知道正在监控多少个文件,未消费event堆积的越多,havester创建的越多,消耗内存越大libbeat.*,也是beats组件通用的指标,包含outputs和pipeline等信息。这里要主要当outputs发生阻塞的时候,会直接影响queue里面event的消费,造成内存堆积registrar,filebeat将监控文件的状态放在registry文件里面,当监控文件非常多的时候,比如10万个,而且没有合理的设置close_inactive参数,这个文件能达到100M,载入内存后,直接占用内存

filebeat日志解析

当然,我们不可能直接去读这个日志,既然我们使用ELK,肯定是用ELK进行解读。因为是kv格式,很方便,用logstash的kv plugin:

filter {

kv {}

}kv无法指定properties的type,所以,我们需要稍微指定了一下索引的模版:

PUT _template/template_1

{

"index_patterns": ["filebeat*"],

"settings": {

"number_of_shards": 1

},

"mappings": {

"doc": {

"_source": {

"enabled": false

},

"dynamic_templates": [

{

"longs_as_strings": {

"match_mapping_type": "string",

"path_match": "*beat.*",

"path_unmatch": "*.*name",

"mapping": {

"type": "long"

}

}

}

]

}

}

}上面的模版,将kv解析出的properties都mapping到long类型,但注意"path_match": "*beat.*"无法match到registrar的指标,读者可以自己写一个更完善的mapping。

这样,我们就可以通过kibana可视化组件,清楚的看到内存泄漏的过程

以及资源的使用情况:

将信息可视化之后,我们可以明显的发现,内存的突变和ERR是同时发生的

即以下error:

2018-11-27T09:05:44+08:00 ERR Harvester could not be started on new file: /qhapp/logs/bd-etl/logs/test2.log, Err: Error setting up harvester: Harvester setup failed. Unexpected file opening error: file info is not identical with opened file. Aborting harvesting and retrying file later again

会导致filebeat突然申请了额外的内存。具体请查看issue

通过pprof

众所周知,filebeat是用go语言实现的,而go语言本身的基础库里面就包含pprof这个功能极其强大的性能分析工具,只是这个工具是用于debug的,在正常模式下,filebeat是不会启动这个选贤的,并且很遗憾,在官方文档里面根本没有提及我们可以使用pprof来观测filebeat。我们接下来可以通过6.3上修复的一个内存泄漏的issue,来学习怎么使用pprof进行分析

启动pprof监测

首先,需要让filebeat在启动的时候运行pprof,具体的做法是在启动是加上参数-httpprof localhost:6060,即/usr/share/filebeat/bin/filebeat -c /etc/filebeat/filebeat.yml -path.home /usr/share/filebeat -path.config /etc/filebeat -path.data /var/lib/filebeat -path.logs /var/log/filebeat -httpprof localhost:6060。这里只绑定了localhost,无法通过远程访问,如果想远程访问,应该使用0.0.0.0。

这时,你就可以通过curl http://localhost:6060/debug/pprof/heap > profile.txt等命令,获取filebeat的实时堆栈信息了。

远程连接

当然,你也可以通过在你的本地电脑上安装go,然后通过go tool远程连接pprof。

因为我们是需要研究内存的问题,所以以下连接访问的是/heap子路径

go tool pprof http://10.60.x.x:6060/debug/pprof/heap

top 命令

连接之后,你可以通过top命令,查看消耗内存最多的几个实例:

33159.58kB of 33159.58kB total ( 100%)

Dropped 308 nodes (cum <= 165.80kB)

Showing top 10 nodes out of 51 (cum >= 512.04kB)

flat flat% sum% cum cum%

19975.92kB 60.24% 60.24% 19975.92kB 60.24% runtime.malg

7680.66kB 23.16% 83.40% 7680.66kB 23.16% github.com/elastic/beats/filebeat/channel.SubOutlet

2048.19kB 6.18% 89.58% 2048.19kB 6.18% github.com/elastic/beats/filebeat/prospector/log.NewHarvester

1357.91kB 4.10% 93.68% 1357.91kB 4.10% runtime.allgadd

1024.08kB 3.09% 96.76% 1024.08kB 3.09% runtime.acquireSudog

544.67kB 1.64% 98.41% 544.67kB 1.64% github.com/elastic/beats/libbeat/publisher/queue/memqueue.NewBroker

528.17kB 1.59% 100% 528.17kB 1.59% regexp.(*bitState).reset

0 0% 100% 528.17kB 1.59% github.com/elastic/beats/filebeat/beater.(*Filebeat).Run

0 0% 100% 512.04kB 1.54% github.com/elastic/beats/filebeat/channel.CloseOnSignal.func1

0 0% 100% 512.04kB 1.54% github.com/elastic/beats/filebeat/channel.SubOutlet.func1查看堆栈调用图

输入web命令,会生产堆栈调用关系的svg图,在这个svg图中,你可以结合top命令一起查看,在top中,我们已经知道github.com/elastic/beats/filebeat/channel.SubOutlet占用了很多的内存,在图中,展现的是调用关系栈,你可以看到这个类是怎么被实例化的,并且在整个堆中,内存是怎么分布的。最直观的是,实例所处的长方形面积越大,代表占用的内存越多。:

查看源码

通过list命令,可以迅速查看可以实例的问题源码,比如在之前的top10命令中,我们已经看到github.com/elastic/beats/filebeat/channel.SubOutlet这个类的实例占用了大量的内存,我们可以通过list做进一步的分析,看看这个类内部在哪个语句开始出现内存的占用:

(pprof) list SubOutlet

Total: 32.38MB

ROUTINE ======================== github.com/elastic/beats/filebeat/channel.SubOutlet in /home/jeremy/src/go/src/github.com/elastic/beats/filebeat/channel/util.go

7.50MB 7.50MB (flat, cum) 23.16% of Total

. . 15:// SubOutlet create a sub-outlet, which can be closed individually, without closing the

. . 16:// underlying outlet.

. . 17:func SubOutlet(out Outleter) Outleter {

. . 18: s := &subOutlet{

. . 19: isOpen: atomic.MakeBool(true),

1MB 1MB 20: done: make(chan struct{}),

2MB 2MB 21: ch: make(chan *util.Data),

4.50MB 4.50MB 22: res: make(chan bool, 1),

. . 23: }

. . 24:

. . 25: go func() {

. . 26: for event := range s.ch {

. . 27: s.res <- out.OnEvent(event) 如何调优

其实调优的过程就是调整参数的过程,之前说过了,和内存相关的参数, max_message_bytes,queue.mem.events,queue.mem.flush.min_events,以及队列占用内存的公式:max_message_bytes * queue.mem.events

output.kafka:

enabled: true

# max_message_bytes: 1000000

hosts: ["10.60.x.x:9092"]

topic: '%{[fields][topic]}'

max_procs: 1

#queue.mem.events: 256

#queue.mem.flush.min_events: 128但其实,不同的环境下,不同的原因都可能会造成filebeat占用的内存过大,此时,需要仔细的确认你的上下文环境:

- 是否因为通配符的原因,造成同时监控数量巨大的文件,这种情况应该避免用通配符监控无用的文件。

- 是否文件的单行内容巨大,确定是否需要改造文件内容,或者将其过滤

- 是否过多的匹配了multiline的pattern,并且多行的event是否单条体积过大。这时,就需要暂时关闭multiline,修改文件内容或者multiline的pattern。

- 是否output经常阻塞,event queue里面总是一直缓存event。这时要检查你的网络环境或者消息队列等中间件是否正常

Day 17 - 关于日志型数据管理策略的思考

近两年随着Elastic Stack的愈发火热,其近乎成为构建实时日志应用的工业标准。在小型数据应用场景,最新的6.5版本已经可以做到开箱即用,无需过多考虑架构上的设计工作。 然而一旦应用规模扩大到数百TB甚至PB的数据量级,整个系统的架构和后期维护工作则显得非常重要。借着2018 Elastic Advent写文的机会,结合过去几年架构和运维公司日志集群的实践经验,对于大规模日志型数据的管理策略,在此做一个总结性的思考。 文中抛出的观点,有些已经在我们的集群中有所应用并取得比较好的效果,有些则还待实践的检验。抛砖引玉,不尽成熟的地方,还请社区各位专家指正。

对于日志系统,最终用户通常有以下几个基本要求:

- 数据从产生到可检索的实时性要求高,可接受的延迟通常要求控制在数秒,至多不超过数十秒

- 新鲜数据(当天至过去几天)的查询和统计频率高,返回速度要快(毫秒级,至多几秒)

- 历史数据保留得越久越好。

针对这些需求,加上对成本控制的必要性,大家通常想到的第一个架构设计就是冷热数据分离。

冷热数据分离

冷热分离的概念比较好理解,热结点做数据的写入,保存近期热数据,冷数据定期迁移到冷数据结点,就这么简单。不过实际操作起来可能还是碰到一些具体需要考虑的细节问题。

- 冷热结点集群配置的JVM heap配置要差异化。热结点无需存放太多数据,对于heap的要求通常不是太高,在够用的情况下尽量配置小一点。可以配置在26GB左右甚至更小,而不是大多数人知道的经验值31GB。原因在于这个size的heap,可以启用

zero basedCompressed Oops,JVM运行效率是最高的, 参考: heap-size。而冷结点存在的目的是尽量放更多的数据,性能不是首要的,因此heap可以配置在31GB。 - 数据迁移过程有一定资源消耗,为避免对数据写入产生显著影响,通常定时在业务低峰期,日志产出量比较低的时候进行,比如半夜。

- 索引是否应该启用压缩,如何启用?最初我们对于热结点上的索引是不启用压缩的,为了节省CPU消耗。只在冷结点配置里,增加了索引压缩选项。这样索引迁移到冷结点后,执行force merge操作的时候,ES会自动将结点上配置的索引压缩属性套用到merge过后新生成的segment,这样就实现了热结点不压缩,冷结点merge过后压缩的功能。极大节省了冷结点的磁盘空间。后来随着硬件的升级,我们发现服务器的cpu基本都是过剩的,磁盘IO通常先到瓶颈。 因此尝试在热结点上一开始创建索引的时候,就启用压缩选项。实际对比测试并没有发现显著的索引吞吐量下降,并且因为索引压缩后磁盘文件size的大幅减少,每天夜间的数据迁移工作可以节省大量的时间。至此我们的日志集群索引默认就是压缩的。

- 冷结点上留做系统缓存的内存一般不多,加上数据量非常巨大。索引默认的mmapfs读取方式,很容易因为系统缓存不够,导致数据在内存和磁盘之间频繁换入换出。严重的情况下,整个结点甚至会因为io持续在100%无法响应。 实践中我们发现对冷结点使用niofs效果会更好。

实现了冷热结点分离以后,集群的资源利用率提升了不少,可管理性也要好很多了. 但是随着接入日志的类型越来越多(我们生产上有差不多400种类型的日志),各种日志的速率差异又很大,让ES自己管理shard的分布很容易产生写入热点问题。 针对这个问题,可以采用对集群结点进行分组管理的策略来解决。

热结点分组管理

所谓分组管理,就是通过在结点的配置文件中增加自定义的标签属性,将服务器区分到不同的组别中。然后通过设置索引的index.routing.allocation.include属性,控制改索引分布在哪个组别。同时配合设置index.routing.allocation.total_shards_per_node,可以做到某个索引的shard在某个group的结点之间绝对均匀分布。

比如一个分组有10台机器,对一个5 primary ,1 replica的索引,让该索引分布在该分组的同时,设置total_shards_per_node为1,让每个节点上只能有一个分片,这样就避免了写入热点问题。 该方案的缺陷也显而易见,一旦有结点挂掉,不会自动recovery,某个shard将一直处于unassigned状态,集群状态变成yellow。 但我认为,热数据的恢复开销是非常高的,与其立即在其他结点开始复制,之后再重新rebalance,不如就让集群暂时处于yellow状态。 通过监控报警的手段,及时通知运维人员解决结点故障。 待故障解决之后,直接从恢复后的结点开始数据复制,开销要低得多。

在我们的生产环境主要有两种类型的结点分组,分别是10台机器一个分组,和2台机器一个分组。10台机器的分组用于应对速率非常高,shard划分比较多的索引,2台机器的分组用于速率很低,一个shard(加一个复制片)就可以应对的索引。

这种分组策略在我们的生产环境中经过验证,非常好的解决了写入热点问题。那么冷数据怎么管理? 冷数据不做写入,不存在写入热点问题,查询频率也比较低,业务需求方面对查询响应要求也不那么严苛,所以查询热点问题也不是那么突出。因此为了简化管理,冷结点我们是不做shard分布的精细控制,所有数据迁移到冷数据结点之后,由ES默认的shard分布则略去控制数据的分布。

不过如果想进一步提高冷数据结点服务器资源的利用率,还是可以有进一步挖掘的的空间。我们知道ES默认的shard分布策略,只是保证一个索引的shard尽量分布在不同的结点,同时保证每个节点上shard数量差不多。但是如果采用默认按天创建索引的策略,由于索引速率差异很大,不同索引之间shard的大小差异可能是1-2个数量级的。如果每个shard的size差异不大就好了,那么默认的分布策略,基本上可以保证冷结点之间数据量分布的大致均匀。 能实现类似功能的是ES的rollover特性。

索引的Rollover

Rollover api可以让索引根据预先定义的时间跨度,或者索引大小来自动切分出新索引,从而将索引的大小控制在计划的范围内。合理的应用rollver api可以保证集群shard大小差别不会太大。 只是集群索引类别比较多的时候,rollover全部手动管理负担比较大,需要借助额外的管理工具和监控工具。我们出于管理简便的考虑,暂时没有应用到这个特性。

索引的Rollup

我们发现生产有些用户写入的“日志”,实际上是多维的metrcis数据,使用的时候不是为了查询日志的详情,仅仅是为了做各种维度组合的过滤和聚合。对于这种类型的数据,保留历史数据过多一来浪费存储空间,二来每次聚合都要在裸数据上跑,非常浪费资源。 ES从6.3开始,在x-pack里推出了rollup api,通过定期对裸数据做预先聚合,大大缩减了保存在磁盘上的数据量。对于不需要查询裸日志的应用场景,合理应用该特性,可以将历史数据的磁盘消耗降低几个数量级,同时rollup search也可以大大提升聚合速度。不过rollup也有其局限性,即他的实现是通过定期任务,对间隔期数据跑聚合完成的,有一定的计算开销。 如果数据写入速率非常高,集群压力很大,rollup可能无法跟上写入速率,而不具有实用性。 所以实际环境中,还是需要根据应用场景和资源使用情况,进行灵活的取舍。

多集群的便利性

数据量大到一定程度以后,单集群由于master node单点的限制,会遇到各种集群状态数据更新时得性能问题。 由此现在一些大规模的应用已经开始利用到多集群互联和cross cluster search的特性。 这种结构除了解决单集群数据容量限制问题以外,我们还发现在做容量均衡方面还有比较好的便利性。应用日志写入量通常随着业务变化也会剧烈变化,好不容易规划好的容量,不久就被业务的增长给打破,数倍或者数10倍的流量增长很可能就让一组结点过载出现写入延迟。 如果只有一个集群,在结点之间重新平衡shard比较费力,涉及到数据的迁移,可能非常缓慢,还会影响写入。 但如果有多集群互联,切换就可以做到非常的快速和简单。 原理上只需要在新集群中加入对应的索引配置模版,然后更新写入程序的配置,写入目标指向新集群,重启写入程序即可。并且,可以进一步将整个流程工具化,在GUI上完成一键切换。

近两年随着Elastic Stack的愈发火热,其近乎成为构建实时日志应用的工业标准。在小型数据应用场景,最新的6.5版本已经可以做到开箱即用,无需过多考虑架构上的设计工作。 然而一旦应用规模扩大到数百TB甚至PB的数据量级,整个系统的架构和后期维护工作则显得非常重要。借着2018 Elastic Advent写文的机会,结合过去几年架构和运维公司日志集群的实践经验,对于大规模日志型数据的管理策略,在此做一个总结性的思考。 文中抛出的观点,有些已经在我们的集群中有所应用并取得比较好的效果,有些则还待实践的检验。抛砖引玉,不尽成熟的地方,还请社区各位专家指正。

对于日志系统,最终用户通常有以下几个基本要求:

- 数据从产生到可检索的实时性要求高,可接受的延迟通常要求控制在数秒,至多不超过数十秒

- 新鲜数据(当天至过去几天)的查询和统计频率高,返回速度要快(毫秒级,至多几秒)

- 历史数据保留得越久越好。

针对这些需求,加上对成本控制的必要性,大家通常想到的第一个架构设计就是冷热数据分离。

冷热数据分离

冷热分离的概念比较好理解,热结点做数据的写入,保存近期热数据,冷数据定期迁移到冷数据结点,就这么简单。不过实际操作起来可能还是碰到一些具体需要考虑的细节问题。

- 冷热结点集群配置的JVM heap配置要差异化。热结点无需存放太多数据,对于heap的要求通常不是太高,在够用的情况下尽量配置小一点。可以配置在26GB左右甚至更小,而不是大多数人知道的经验值31GB。原因在于这个size的heap,可以启用

zero basedCompressed Oops,JVM运行效率是最高的, 参考: heap-size。而冷结点存在的目的是尽量放更多的数据,性能不是首要的,因此heap可以配置在31GB。 - 数据迁移过程有一定资源消耗,为避免对数据写入产生显著影响,通常定时在业务低峰期,日志产出量比较低的时候进行,比如半夜。

- 索引是否应该启用压缩,如何启用?最初我们对于热结点上的索引是不启用压缩的,为了节省CPU消耗。只在冷结点配置里,增加了索引压缩选项。这样索引迁移到冷结点后,执行force merge操作的时候,ES会自动将结点上配置的索引压缩属性套用到merge过后新生成的segment,这样就实现了热结点不压缩,冷结点merge过后压缩的功能。极大节省了冷结点的磁盘空间。后来随着硬件的升级,我们发现服务器的cpu基本都是过剩的,磁盘IO通常先到瓶颈。 因此尝试在热结点上一开始创建索引的时候,就启用压缩选项。实际对比测试并没有发现显著的索引吞吐量下降,并且因为索引压缩后磁盘文件size的大幅减少,每天夜间的数据迁移工作可以节省大量的时间。至此我们的日志集群索引默认就是压缩的。

- 冷结点上留做系统缓存的内存一般不多,加上数据量非常巨大。索引默认的mmapfs读取方式,很容易因为系统缓存不够,导致数据在内存和磁盘之间频繁换入换出。严重的情况下,整个结点甚至会因为io持续在100%无法响应。 实践中我们发现对冷结点使用niofs效果会更好。

实现了冷热结点分离以后,集群的资源利用率提升了不少,可管理性也要好很多了. 但是随着接入日志的类型越来越多(我们生产上有差不多400种类型的日志),各种日志的速率差异又很大,让ES自己管理shard的分布很容易产生写入热点问题。 针对这个问题,可以采用对集群结点进行分组管理的策略来解决。

热结点分组管理

所谓分组管理,就是通过在结点的配置文件中增加自定义的标签属性,将服务器区分到不同的组别中。然后通过设置索引的index.routing.allocation.include属性,控制改索引分布在哪个组别。同时配合设置index.routing.allocation.total_shards_per_node,可以做到某个索引的shard在某个group的结点之间绝对均匀分布。

比如一个分组有10台机器,对一个5 primary ,1 replica的索引,让该索引分布在该分组的同时,设置total_shards_per_node为1,让每个节点上只能有一个分片,这样就避免了写入热点问题。 该方案的缺陷也显而易见,一旦有结点挂掉,不会自动recovery,某个shard将一直处于unassigned状态,集群状态变成yellow。 但我认为,热数据的恢复开销是非常高的,与其立即在其他结点开始复制,之后再重新rebalance,不如就让集群暂时处于yellow状态。 通过监控报警的手段,及时通知运维人员解决结点故障。 待故障解决之后,直接从恢复后的结点开始数据复制,开销要低得多。

在我们的生产环境主要有两种类型的结点分组,分别是10台机器一个分组,和2台机器一个分组。10台机器的分组用于应对速率非常高,shard划分比较多的索引,2台机器的分组用于速率很低,一个shard(加一个复制片)就可以应对的索引。

这种分组策略在我们的生产环境中经过验证,非常好的解决了写入热点问题。那么冷数据怎么管理? 冷数据不做写入,不存在写入热点问题,查询频率也比较低,业务需求方面对查询响应要求也不那么严苛,所以查询热点问题也不是那么突出。因此为了简化管理,冷结点我们是不做shard分布的精细控制,所有数据迁移到冷数据结点之后,由ES默认的shard分布则略去控制数据的分布。

不过如果想进一步提高冷数据结点服务器资源的利用率,还是可以有进一步挖掘的的空间。我们知道ES默认的shard分布策略,只是保证一个索引的shard尽量分布在不同的结点,同时保证每个节点上shard数量差不多。但是如果采用默认按天创建索引的策略,由于索引速率差异很大,不同索引之间shard的大小差异可能是1-2个数量级的。如果每个shard的size差异不大就好了,那么默认的分布策略,基本上可以保证冷结点之间数据量分布的大致均匀。 能实现类似功能的是ES的rollover特性。

索引的Rollover

Rollover api可以让索引根据预先定义的时间跨度,或者索引大小来自动切分出新索引,从而将索引的大小控制在计划的范围内。合理的应用rollver api可以保证集群shard大小差别不会太大。 只是集群索引类别比较多的时候,rollover全部手动管理负担比较大,需要借助额外的管理工具和监控工具。我们出于管理简便的考虑,暂时没有应用到这个特性。

索引的Rollup

我们发现生产有些用户写入的“日志”,实际上是多维的metrcis数据,使用的时候不是为了查询日志的详情,仅仅是为了做各种维度组合的过滤和聚合。对于这种类型的数据,保留历史数据过多一来浪费存储空间,二来每次聚合都要在裸数据上跑,非常浪费资源。 ES从6.3开始,在x-pack里推出了rollup api,通过定期对裸数据做预先聚合,大大缩减了保存在磁盘上的数据量。对于不需要查询裸日志的应用场景,合理应用该特性,可以将历史数据的磁盘消耗降低几个数量级,同时rollup search也可以大大提升聚合速度。不过rollup也有其局限性,即他的实现是通过定期任务,对间隔期数据跑聚合完成的,有一定的计算开销。 如果数据写入速率非常高,集群压力很大,rollup可能无法跟上写入速率,而不具有实用性。 所以实际环境中,还是需要根据应用场景和资源使用情况,进行灵活的取舍。

多集群的便利性

数据量大到一定程度以后,单集群由于master node单点的限制,会遇到各种集群状态数据更新时得性能问题。 由此现在一些大规模的应用已经开始利用到多集群互联和cross cluster search的特性。 这种结构除了解决单集群数据容量限制问题以外,我们还发现在做容量均衡方面还有比较好的便利性。应用日志写入量通常随着业务变化也会剧烈变化,好不容易规划好的容量,不久就被业务的增长给打破,数倍或者数10倍的流量增长很可能就让一组结点过载出现写入延迟。 如果只有一个集群,在结点之间重新平衡shard比较费力,涉及到数据的迁移,可能非常缓慢,还会影响写入。 但如果有多集群互联,切换就可以做到非常的快速和简单。 原理上只需要在新集群中加入对应的索引配置模版,然后更新写入程序的配置,写入目标指向新集群,重启写入程序即可。并且,可以进一步将整个流程工具化,在GUI上完成一键切换。

收起阅读 »社区日报 第481期 (2018-12-17)

http://t.cn/EUn1Wa2

2.ElasticSearch 和 lucene 本周发展详情

http://t.cn/EUnBPRV

3.Elasticsearch 自动在文档中添加时间戳

http://t.cn/EUfESt7

编辑:cyberdak

归档:https://elasticsearch.cn/article/6204

订阅:https://tinyletter.com/elastic-daily

http://t.cn/EUn1Wa2

2.ElasticSearch 和 lucene 本周发展详情

http://t.cn/EUnBPRV

3.Elasticsearch 自动在文档中添加时间戳

http://t.cn/EUfESt7

编辑:cyberdak

归档:https://elasticsearch.cn/article/6204

订阅:https://tinyletter.com/elastic-daily 收起阅读 »

【2019.01.12】OpenResty x Open Talk 丨深圳站

一、活动介绍

OpenResty 是一个基于 Nginx 与 Lua 的高性能 Web 平台,其内部集成了大量精良的 Lua 库、第三方模块以及大多数的依赖项。用于方便地搭建能够处理超高并发、扩展性极高的动态 Web 应用、Web 服务和动态网关。目前 OpenResty 是全球占有率排名第五的 Web 服务器,超过 1 万多家商业公司都在使用 OpenResty,比如又拍云、腾讯、奇虎360、京东、12306、Kong 等公司,应用在 CDN、广告系统、搜索、实现业务逻辑、余票查询、网关、ingress controller 等各种不同的场景下。

又拍云 Open Talk 是由又拍云发起的系列主题分享沙龙,秉承又拍云帮助企业提升发展速度的初衷,从 2015 年开启以来,Open Talk 至今已成功举办 45 期,辐射线上线下近十万技术人群,分别在北京、上海、广州、深圳、杭州等 12 座城市举办,覆盖腾讯、华为、网易、京东、唯品会、哔哩哔哩、美团点评、有赞、无码科技等诸多知名企业,往期的活动的讲稿及视频详见:https://opentalk.upyun.com。

为促进 OpenResty 在技术圈的发展,增进 OpenResty 使用者的交流与学习,OpenResty 中国社区联合又拍云,举办 “OpenResty × Open Talk” 全国巡回沙龙,邀请业内资深的 OpenResty 技术专家,分享 OpenResty 实战经验,推动 OpenResty 开源项目的发展,促进互联网技术的教育。

二、活动时间

2019 年 01 月 12 日(周六)

三、活动地址

广东省深圳市南山区深圳虚拟大学园 R3-B 栋一楼触梦社区

四、报名地址

http://www.huodongxing.com/event/5470242889300

五、嘉宾及议题介绍

分享嘉宾一:温铭 / OpenResty 软件基金会主席

OpenResty 软件基金会主席,前360开源委员会委员;现为 OpenResty Inc. 合伙人,致力于打造基于 OpenResty、Linux 内核等基础平台的企业级产品和解决方案。创业之前在互联网安全公司工作了 10 年,主要从事服务端的开发和架构,负责开发过木马云查杀、反钓鱼系统和企业安全产品。

分享主题:《 使用 OpenResty 实现 memcached server 》

OpenResty 软件基金会主席,前360开源委员会委员;现为 OpenResty Inc. 合伙人,致力于打造基于 OpenResty、Linux 内核等基础平台的企业级产品和解决方案。创业之前在互联网安全公司工作了 10 年,主要从事服务端的开发和架构,负责开发过木马云查杀、反钓鱼系统和企业安全产品。

分享嘉宾二:张聪 / 又拍云首席架构师

多年 CDN 行业产品设计、技术开发和团队管理相关经验,个人技术方向集中在 Nginx、OpenResty 等高性能 Web 服务器方面,国内 OpenResty 技术早期推广者之一;目前担任又拍云内容加速部技术负责人,主导又拍云 CDN 技术平台的建设和发展。

分享主题:《 OpenResty 动态流控的几种姿势 》

本次分享主要介绍 OpenResty 上几种常用的请求限制和流量整形的方式,特别值得一提的是,详细介绍在又拍云网关层的实际应用案例,以及又拍云贡献的开源 Resty 库。

分享嘉宾三:张波 / 虎牙直播运维研发架构师

目前主要负责虎牙直播运维体系的建设,主要针对 web 和后台类程序的发布、监控、运维自动化相关的运维系统的设计和开发。

分享主题:《 掘金 Nginx 日志 》

Nginx 是现在最流行的负载均衡和反向代理服务器之一,如果你是一位中小微型网站的开发运维人员,可能仅 Nginx 每天就会产生上百 M 甚至数以十 G 的日志文件,Nginx 日志被删除以前,或者我们可以想想,其中是否蕴含着位置的金矿等待挖掘?

分享嘉宾四:陈乾龙 / 京东微信手 Q 业务部

负责微信、手Q抢购后台,以及 OpenResty 入口流量的网关

分享主题:《 基于 OpenResty 流量的防刷及容灾 》

本次分享主要介绍基于 OpenResty 搭建流量网关的实践以及开发过程中碰到的问题及优化。

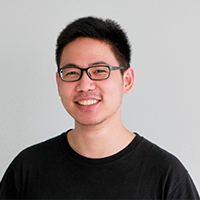

六、现场礼品

一、活动介绍

OpenResty 是一个基于 Nginx 与 Lua 的高性能 Web 平台,其内部集成了大量精良的 Lua 库、第三方模块以及大多数的依赖项。用于方便地搭建能够处理超高并发、扩展性极高的动态 Web 应用、Web 服务和动态网关。目前 OpenResty 是全球占有率排名第五的 Web 服务器,超过 1 万多家商业公司都在使用 OpenResty,比如又拍云、腾讯、奇虎360、京东、12306、Kong 等公司,应用在 CDN、广告系统、搜索、实现业务逻辑、余票查询、网关、ingress controller 等各种不同的场景下。

又拍云 Open Talk 是由又拍云发起的系列主题分享沙龙,秉承又拍云帮助企业提升发展速度的初衷,从 2015 年开启以来,Open Talk 至今已成功举办 45 期,辐射线上线下近十万技术人群,分别在北京、上海、广州、深圳、杭州等 12 座城市举办,覆盖腾讯、华为、网易、京东、唯品会、哔哩哔哩、美团点评、有赞、无码科技等诸多知名企业,往期的活动的讲稿及视频详见:https://opentalk.upyun.com。

为促进 OpenResty 在技术圈的发展,增进 OpenResty 使用者的交流与学习,OpenResty 中国社区联合又拍云,举办 “OpenResty × Open Talk” 全国巡回沙龙,邀请业内资深的 OpenResty 技术专家,分享 OpenResty 实战经验,推动 OpenResty 开源项目的发展,促进互联网技术的教育。

二、活动时间

2019 年 01 月 12 日(周六)

三、活动地址

广东省深圳市南山区深圳虚拟大学园 R3-B 栋一楼触梦社区

四、报名地址

http://www.huodongxing.com/event/5470242889300

五、嘉宾及议题介绍

分享嘉宾一:温铭 / OpenResty 软件基金会主席

OpenResty 软件基金会主席,前360开源委员会委员;现为 OpenResty Inc. 合伙人,致力于打造基于 OpenResty、Linux 内核等基础平台的企业级产品和解决方案。创业之前在互联网安全公司工作了 10 年,主要从事服务端的开发和架构,负责开发过木马云查杀、反钓鱼系统和企业安全产品。

分享主题:《 使用 OpenResty 实现 memcached server 》

OpenResty 软件基金会主席,前360开源委员会委员;现为 OpenResty Inc. 合伙人,致力于打造基于 OpenResty、Linux 内核等基础平台的企业级产品和解决方案。创业之前在互联网安全公司工作了 10 年,主要从事服务端的开发和架构,负责开发过木马云查杀、反钓鱼系统和企业安全产品。

分享嘉宾二:张聪 / 又拍云首席架构师

多年 CDN 行业产品设计、技术开发和团队管理相关经验,个人技术方向集中在 Nginx、OpenResty 等高性能 Web 服务器方面,国内 OpenResty 技术早期推广者之一;目前担任又拍云内容加速部技术负责人,主导又拍云 CDN 技术平台的建设和发展。

分享主题:《 OpenResty 动态流控的几种姿势 》

本次分享主要介绍 OpenResty 上几种常用的请求限制和流量整形的方式,特别值得一提的是,详细介绍在又拍云网关层的实际应用案例,以及又拍云贡献的开源 Resty 库。

分享嘉宾三:张波 / 虎牙直播运维研发架构师

目前主要负责虎牙直播运维体系的建设,主要针对 web 和后台类程序的发布、监控、运维自动化相关的运维系统的设计和开发。

分享主题:《 掘金 Nginx 日志 》

Nginx 是现在最流行的负载均衡和反向代理服务器之一,如果你是一位中小微型网站的开发运维人员,可能仅 Nginx 每天就会产生上百 M 甚至数以十 G 的日志文件,Nginx 日志被删除以前,或者我们可以想想,其中是否蕴含着位置的金矿等待挖掘?

分享嘉宾四:陈乾龙 / 京东微信手 Q 业务部

负责微信、手Q抢购后台,以及 OpenResty 入口流量的网关

分享主题:《 基于 OpenResty 流量的防刷及容灾 》

本次分享主要介绍基于 OpenResty 搭建流量网关的实践以及开发过程中碰到的问题及优化。

六、现场礼品

Day 16 - Elasticsearch性能调优

因为总是看到很多同学在说elasticsearch性能不够好,集群不够稳定,询问关于elasticsearch的调优,但是每次都是一个个点的单独讲,很多时候都是case by case的解答,今天简单梳理下日常的elasticsearch使用调优,以下仅为自己日常经验之谈,如有疏漏,还请大家帮忙指正。

一、配置文件调优

elasticsearch.yml

内存锁定

bootstrap.memory_lock:true 允许 JVM 锁住内存,禁止操作系统交换出去。

zen.discovery

Elasticsearch 默认被配置为使用单播发现,以防止节点无意中加入集群。组播发现应该永远不被使用在生产环境了,否则你得到的结果就是一个节点意外的加入到了你的生产环境,仅仅是因为他们收到了一个错误的组播信号。 ES是一个P2P类型的分布式系统,使用gossip协议,集群的任意请求都可以发送到集群的任一节点,然后es内部会找到需要转发的节点,并且与之进行通信。 在es1.x的版本,es默认是开启组播,启动es之后,可以快速将局域网内集群名称,默认端口的相同实例加入到一个大的集群,后续再es2.x之后,都调整成了单播,避免安全问题和网络风暴; 单播discovery.zen.ping.unicast.hosts,建议写入集群内所有的节点及端口,如果新实例加入集群,新实例只需要写入当前集群的实例,即可自动加入到当前集群,之后再处理原实例的配置即可,新实例加入集群,不需要重启原有实例; 节点zen相关配置: discovery.zen.ping_timeout:判断master选举过程中,发现其他node存活的超时设置,主要影响选举的耗时,参数仅在加入或者选举 master 主节点的时候才起作用 discovery.zen.join_timeout:节点确定加入到集群中,向主节点发送加入请求的超时时间,默认为3s discovery.zen.minimum_master_nodes:参与master选举的最小节点数,当集群能够被选为master的节点数量小于最小数量时,集群将无法正常选举。

故障检测( fault detection )

两种情况下回进行故障检测,第一种是由master向集群的所有其他节点发起ping,验证节点是否处于活动状态;第二种是:集群每个节点向master发起ping,判断master是否存活,是否需要发起选举。 故障检测需要配置以下设置使用 形如: discovery.zen.fd.ping_interval 节点被ping的频率,默认为1s。 discovery.zen.fd.ping_timeout 等待ping响应的时间,默认为 30s,运行的集群中,master 检测所有节点,以及节点检测 master 是否正常。 discovery.zen.fd.ping_retries ping失败/超时多少导致节点被视为失败,默认为3。

https://www.elastic.co/guide/en/elasticsearch/reference/6.x/modules-discovery-zen.html

队列数量

不建议盲目加大es的队列数量,如果是偶发的因为数据突增,导致队列阻塞,加大队列size可以使用内存来缓存数据,如果是持续性的数据阻塞在队列,加大队列size除了加大内存占用,并不能有效提高数据写入速率,反而可能加大es宕机时候,在内存中可能丢失的上数据量。 哪些情况下,加大队列size呢?GET /_cat/thread_pool,观察api中返回的queue和rejected,如果确实存在队列拒绝或者是持续的queue,可以酌情调整队列size。

https://www.elastic.co/guide/en/elasticsearch/reference/6.x/modules-threadpool.html

内存使用

设置indices的内存熔断相关参数,根据实际情况进行调整,防止写入或查询压力过高导致OOM, indices.breaker.total.limit: 50%,集群级别的断路器,默认为jvm堆的70%; indices.breaker.request.limit: 10%,单个request的断路器限制,默认为jvm堆的60%; indices.breaker.fielddata.limit: 10%,fielddata breaker限制,默认为jvm堆的60%。

https://www.elastic.co/guide/en/elasticsearch/reference/6.x/circuit-breaker.html

根据实际情况调整查询占用cache,避免查询cache占用过多的jvm内存,参数为静态的,需要在每个数据节点配置。 indices.queries.cache.size: 5%,控制过滤器缓存的内存大小,默认为10%。接受百分比值,5%或者精确值,例如512mb。

https://www.elastic.co/guide/en/elasticsearch/reference/6.x/query-cache.html

创建shard

如果集群规模较大,可以阻止新建shard时扫描集群内全部shard的元数据,提升shard分配速度。 cluster.routing.allocation.disk.include_relocations: false,默认为true。

https://www.elastic.co/guide/en/elasticsearch/reference/6.x/disk-allocator.html

二、系统层面调优

jdk版本

当前根据官方建议,选择匹配的jdk版本;

jdk内存配置

首先,-Xms和-Xmx设置为相同的值,避免在运行过程中再进行内存分配,同时,如果系统内存小于64G,建议设置略小于机器内存的一半,剩余留给系统使用。 同时,jvm heap建议不要超过32G(不同jdk版本具体的值会略有不同),否则jvm会因为内存指针压缩导致内存浪费,详见: https://www.elastic.co/guide/cn/elasticsearch/guide/current/heap-sizing.html

交换分区

关闭交换分区,防止内存发生交换导致性能下降(部分情况下,宁死勿慢) swapoff -a

文件句柄

Lucene 使用了 大量的 文件。 同时,Elasticsearch 在节点和 HTTP 客户端之间进行通信也使用了大量的套接字,所有这一切都需要足够的文件描述符,默认情况下,linux默认运行单个进程打开1024个文件句柄,这显然是不够的,故需要加大文件句柄数 ulimit -n 65536

https://www.elastic.co/guide/en/elasticsearch/reference/6.5/setting-system-settings.html

mmap

Elasticsearch 对各种文件混合使用了 NioFs( 注:非阻塞文件系统)和 MMapFs ( 注:内存映射文件系统)。请确保你配置的最大映射数量,以便有足够的虚拟内存可用于 mmapped 文件。这可以暂时设置: sysctl -w vm.max_map_count=262144 或者你可以在 /etc/sysctl.conf 通过修改 vm.max_map_count 永久设置它。

https://www.elastic.co/guide/cn/elasticsearch/guide/current/_file_descriptors_and_mmap.html

磁盘

如果你正在使用 SSDs,确保你的系统 I/O 调度程序是配置正确的。 当你向硬盘写数据,I/O 调度程序决定何时把数据实际发送到硬盘。 大多数默认 nix 发行版下的调度程序都叫做 cfq(完全公平队列)。但它是为旋转介质优化的: 机械硬盘的固有特性意味着它写入数据到基于物理布局的硬盘会更高效。

这对 SSD 来说是低效的,尽管这里没有涉及到机械硬盘。但是,deadline 或者 noop 应该被使用。deadline 调度程序基于写入等待时间进行优化, noop 只是一个简单的 FIFO 队列。

echo noop > /sys/block/sd/queue/scheduler

磁盘挂载

mount -o noatime,data=writeback,barrier=0,nobh /dev/sd* /esdata*

其中,noatime,禁止记录访问时间戳;data=writeback,不记录journal;barrier=0,因为关闭了journal,所以同步关闭barrier;

nobh,关闭buffer_head,防止内核影响数据IO

磁盘其他注意事项

使用 RAID 0。条带化 RAID 会提高磁盘I/O,代价显然就是当一块硬盘故障时整个就故障了,不要使用镜像或者奇偶校验 RAID 因为副本已经提供了这个功能。 另外,使用多块硬盘,并允许 Elasticsearch 通过多个 path.data 目录配置把数据条带化分配到它们上面。 不要使用远程挂载的存储,比如 NFS 或者 SMB/CIFS。这个引入的延迟对性能来说完全是背道而驰的。

三、elasticsearch使用方式调优

当elasticsearch本身的配置没有明显的问题之后,发现es使用还是非常慢,这个时候,就需要我们去定位es本身的问题了,首先祭出定位问题的第一个命令:

hot_threads

GET /_nodes/hot_threads&interval=30s

抓取30s的节点上占用资源的热线程,并通过排查占用资源最多的TOP线程来判断对应的资源消耗是否正常,一般情况下,bulk,search类的线程占用资源都可能是业务造成的,但是如果是merge线程占用了大量的资源,就应该考虑是不是创建index或者刷磁盘间隔太小,批量写入size太小造成的。

https://www.elastic.co/guide/en/elasticsearch/reference/6.x/cluster-nodes-hot-threads.html

pending_tasks

GET /_cluster/pending_tasks

有一些任务只能由主节点去处理,比如创建一个新的 索引或者在集群中移动分片,由于一个集群中只能有一个主节点,所以只有这一master节点可以处理集群级别的元数据变动。在99.9999%的时间里,这不会有什么问题,元数据变动的队列基本上保持为零。在一些罕见的集群里,元数据变动的次数比主节点能处理的还快,这会导致等待中的操作会累积成队列。这个时候可以通过pending_tasks api分析当前什么操作阻塞了es的队列,比如,集群异常时,会有大量的shard在recovery,如果集群在大量创建新字段,会出现大量的put_mappings的操作,所以正常情况下,需要禁用动态mapping。

https://www.elastic.co/guide/en/elasticsearch/reference/current/cluster-pending.html

字段存储

当前es主要有doc_values,fielddata,storefield三种类型,大部分情况下,并不需要三种类型都存储,可根据实际场景进行调整: 当前用得最多的就是doc_values,列存储,对于不需要进行分词的字段,都可以开启doc_values来进行存储(且只保留keyword字段),节约内存,当然,开启doc_values会对查询性能有一定的影响,但是,这个性能损耗是比较小的,而且是值得的;

fielddata构建和管理 100% 在内存中,常驻于 JVM 内存堆,所以可用于快速查询,但是这也意味着它本质上是不可扩展的,有很多边缘情况下要提防,如果对于字段没有分析需求,可以关闭fielddata;

storefield主要用于_source字段,默认情况下,数据在写入es的时候,es会将doc数据存储为_source字段,查询时可以通过_source字段快速获取doc的原始结构,如果没有update,reindex等需求,可以将_source字段disable;

_all,ES在6.x以前的版本,默认将写入的字段拼接成一个大的字符串,并对该字段进行分词,用于支持整个doc的全文检索,在知道doc字段名称的情况下,建议关闭掉该字段,节约存储空间,也避免不带字段key的全文检索;

norms:搜索时进行评分,日志场景一般不需要评分,建议关闭;

tranlog

Elasticsearch 2.0之后为了保证不丢数据,每次 index、bulk、delete、update 完成的时候,一定触发刷新 translog 到磁盘上,才给请求返回 200 OK。这个改变在提高数据安全性的同时当然也降低了一点性能。 如果你不在意这点可能性,还是希望性能优先,可以在 index template 里设置如下参数:

{

"index.translog.durability": "async"

}index.translog.sync_interval:对于一些大容量的偶尔丢失几秒数据问题也并不严重的集群,使用异步的 fsync 还是比较有益的。比如,写入的数据被缓存到内存中,再每5秒执行一次 fsync ,默认为5s。小于的值100ms是不允许的。 index.translog.flush_threshold_size:translog存储尚未安全保存在Lucene中的所有操作。虽然这些操作可用于读取,但如果要关闭并且必须恢复,则需要重新编制索引。此设置控制这些操作的最大总大小,以防止恢复时间过长。达到设置的最大size后,将发生刷新,生成新的Lucene提交点,默认为512mb。

refresh_interval

执行刷新操作的频率,这会使索引的最近更改对搜索可见,默认为1s,可以设置-1为禁用刷新,对于写入速率要求较高的场景,可以适当的加大对应的时长,减小磁盘io和segment的生成;

禁止动态mapping

动态mapping的坏处: 1.造成集群元数据一直变更,导致集群不稳定; 2.可能造成数据类型与实际类型不一致; 3.对于一些异常字段或者是扫描类的字段,也会频繁的修改mapping,导致业务不可控。 动态mapping配置的可选值及含义如下: true:支持动态扩展,新增数据有新的字段属性时,自动添加对于的mapping,数据写入成功 false:不支持动态扩展,新增数据有新的字段属性时,直接忽略,数据写入成功 strict:不支持动态扩展,新增数据有新的字段时,报错,数据写入失败

批量写入

批量请求显然会大大提升写入速率,且这个速率是可以量化的,官方建议每次批量的数据物理字节数5-15MB是一个比较不错的起点,注意这里说的是物理字节数大小。文档计数对批量大小来说不是一个好指标。比如说,如果你每次批量索引 1000 个文档,记住下面的事实: 1000 个 1 KB 大小的文档加起来是 1 MB 大。 1000 个 100 KB 大小的文档加起来是 100 MB 大。 这可是完完全全不一样的批量大小了。批量请求需要在协调节点上加载进内存,所以批量请求的物理大小比文档计数重要得多。 从 5–15 MB 开始测试批量请求大小,缓慢增加这个数字,直到你看不到性能提升为止。然后开始增加你的批量写入的并发度(多线程等等办法)。 用iostat 、 top 和 ps 等工具监控你的节点,观察资源什么时候达到瓶颈。如果你开始收到 EsRejectedExecutionException ,你的集群没办法再继续了:至少有一种资源到瓶颈了。或者减少并发数,或者提供更多的受限资源(比如从机械磁盘换成 SSD),或者添加更多节点。

索引和shard

es的索引,shard都会有对应的元数据,且因为es的元数据都是保存在master节点,且元数据的更新是要hang住集群向所有节点同步的,当es的新建字段或者新建索引的时候,都会要获取集群元数据,并对元数据进行变更及同步,此时会影响集群的响应,所以需要关注集群的index和shard数量,建议如下: 1.使用shrink和rollover api,相对生成合适的数据shard数; 2.根据数据量级及对应的性能需求,选择创建index的名称,形如:按月生成索引:test-YYYYMM,按天生成索引:test-YYYYMMDD; 3.控制单个shard的size,正常情况下,日志场景,建议单个shard不大于50GB,线上业务场景,建议单个shard不超过20GB;

segment merge

段合并的计算量庞大, 而且还要吃掉大量磁盘 I/O。合并在后台定期操作,因为他们可能要很长时间才能完成,尤其是比较大的段。这个通常来说都没问题,因为大规模段合并的概率是很小的。

如果发现merge占用了大量的资源,可以设置:

index.merge.scheduler.max_thread_count: 1

特别是机械磁盘在并发 I/O 支持方面比较差,所以我们需要降低每个索引并发访问磁盘的线程数。这个设置允许 max_thread_count + 2 个线程同时进行磁盘操作,也就是设置为 1 允许三个线程。

对于 SSD,你可以忽略这个设置,默认是 Math.min(3, Runtime.getRuntime().availableProcessors() / 2) ,对 SSD 来说运行的很好。

业务低峰期通过force_merge强制合并segment,降低segment的数量,减小内存消耗;

关闭冷索引,业务需要的时候再进行开启,如果一直不使用的索引,可以定期删除,或者备份到hadoop集群;

自动生成_id

当写入端使用特定的id将数据写入es时,es会去检查对应的index下是否存在相同的id,这个操作会随着文档数量的增加而消耗越来越大,所以如果业务上没有强需求,建议使用es自动生成的id,加快写入速率。

routing

对于数据量较大的业务查询场景,es侧一般会创建多个shard,并将shard分配到集群中的多个实例来分摊压力,正常情况下,一个查询会遍历查询所有的shard,然后将查询到的结果进行merge之后,再返回给查询端。此时,写入的时候设置routing,可以避免每次查询都遍历全量shard,而是查询的时候也指定对应的routingkey,这种情况下,es会只去查询对应的shard,可以大幅度降低合并数据和调度全量shard的开销。

使用alias

生产提供服务的索引,切记使用别名提供服务,而不是直接暴露索引名称,避免后续因为业务变更或者索引数据需要reindex等情况造成业务中断。

避免宽表

在索引中定义太多字段是一种可能导致映射爆炸的情况,这可能导致内存不足错误和难以恢复的情况,这个问题可能比预期更常见,index.mapping.total_fields.limit ,默认值是1000

避免稀疏索引

因为索引稀疏之后,对应的相邻文档id的delta值会很大,lucene基于文档id做delta编码压缩导致压缩率降低,从而导致索引文件增大,同时,es的keyword,数组类型采用doc_values结构,每个文档都会占用一定的空间,即使字段是空值,所以稀疏索引会造成磁盘size增大,导致查询和写入效率降低。

因为总是看到很多同学在说elasticsearch性能不够好,集群不够稳定,询问关于elasticsearch的调优,但是每次都是一个个点的单独讲,很多时候都是case by case的解答,今天简单梳理下日常的elasticsearch使用调优,以下仅为自己日常经验之谈,如有疏漏,还请大家帮忙指正。

一、配置文件调优

elasticsearch.yml

内存锁定

bootstrap.memory_lock:true 允许 JVM 锁住内存,禁止操作系统交换出去。

zen.discovery

Elasticsearch 默认被配置为使用单播发现,以防止节点无意中加入集群。组播发现应该永远不被使用在生产环境了,否则你得到的结果就是一个节点意外的加入到了你的生产环境,仅仅是因为他们收到了一个错误的组播信号。 ES是一个P2P类型的分布式系统,使用gossip协议,集群的任意请求都可以发送到集群的任一节点,然后es内部会找到需要转发的节点,并且与之进行通信。 在es1.x的版本,es默认是开启组播,启动es之后,可以快速将局域网内集群名称,默认端口的相同实例加入到一个大的集群,后续再es2.x之后,都调整成了单播,避免安全问题和网络风暴; 单播discovery.zen.ping.unicast.hosts,建议写入集群内所有的节点及端口,如果新实例加入集群,新实例只需要写入当前集群的实例,即可自动加入到当前集群,之后再处理原实例的配置即可,新实例加入集群,不需要重启原有实例; 节点zen相关配置: discovery.zen.ping_timeout:判断master选举过程中,发现其他node存活的超时设置,主要影响选举的耗时,参数仅在加入或者选举 master 主节点的时候才起作用 discovery.zen.join_timeout:节点确定加入到集群中,向主节点发送加入请求的超时时间,默认为3s discovery.zen.minimum_master_nodes:参与master选举的最小节点数,当集群能够被选为master的节点数量小于最小数量时,集群将无法正常选举。

故障检测( fault detection )

两种情况下回进行故障检测,第一种是由master向集群的所有其他节点发起ping,验证节点是否处于活动状态;第二种是:集群每个节点向master发起ping,判断master是否存活,是否需要发起选举。 故障检测需要配置以下设置使用 形如: discovery.zen.fd.ping_interval 节点被ping的频率,默认为1s。 discovery.zen.fd.ping_timeout 等待ping响应的时间,默认为 30s,运行的集群中,master 检测所有节点,以及节点检测 master 是否正常。 discovery.zen.fd.ping_retries ping失败/超时多少导致节点被视为失败,默认为3。

https://www.elastic.co/guide/en/elasticsearch/reference/6.x/modules-discovery-zen.html

队列数量

不建议盲目加大es的队列数量,如果是偶发的因为数据突增,导致队列阻塞,加大队列size可以使用内存来缓存数据,如果是持续性的数据阻塞在队列,加大队列size除了加大内存占用,并不能有效提高数据写入速率,反而可能加大es宕机时候,在内存中可能丢失的上数据量。 哪些情况下,加大队列size呢?GET /_cat/thread_pool,观察api中返回的queue和rejected,如果确实存在队列拒绝或者是持续的queue,可以酌情调整队列size。

https://www.elastic.co/guide/en/elasticsearch/reference/6.x/modules-threadpool.html

内存使用

设置indices的内存熔断相关参数,根据实际情况进行调整,防止写入或查询压力过高导致OOM, indices.breaker.total.limit: 50%,集群级别的断路器,默认为jvm堆的70%; indices.breaker.request.limit: 10%,单个request的断路器限制,默认为jvm堆的60%; indices.breaker.fielddata.limit: 10%,fielddata breaker限制,默认为jvm堆的60%。

https://www.elastic.co/guide/en/elasticsearch/reference/6.x/circuit-breaker.html

根据实际情况调整查询占用cache,避免查询cache占用过多的jvm内存,参数为静态的,需要在每个数据节点配置。 indices.queries.cache.size: 5%,控制过滤器缓存的内存大小,默认为10%。接受百分比值,5%或者精确值,例如512mb。

https://www.elastic.co/guide/en/elasticsearch/reference/6.x/query-cache.html

创建shard

如果集群规模较大,可以阻止新建shard时扫描集群内全部shard的元数据,提升shard分配速度。 cluster.routing.allocation.disk.include_relocations: false,默认为true。

https://www.elastic.co/guide/en/elasticsearch/reference/6.x/disk-allocator.html

二、系统层面调优

jdk版本

当前根据官方建议,选择匹配的jdk版本;

jdk内存配置

首先,-Xms和-Xmx设置为相同的值,避免在运行过程中再进行内存分配,同时,如果系统内存小于64G,建议设置略小于机器内存的一半,剩余留给系统使用。 同时,jvm heap建议不要超过32G(不同jdk版本具体的值会略有不同),否则jvm会因为内存指针压缩导致内存浪费,详见: https://www.elastic.co/guide/cn/elasticsearch/guide/current/heap-sizing.html

交换分区

关闭交换分区,防止内存发生交换导致性能下降(部分情况下,宁死勿慢) swapoff -a

文件句柄

Lucene 使用了 大量的 文件。 同时,Elasticsearch 在节点和 HTTP 客户端之间进行通信也使用了大量的套接字,所有这一切都需要足够的文件描述符,默认情况下,linux默认运行单个进程打开1024个文件句柄,这显然是不够的,故需要加大文件句柄数 ulimit -n 65536

https://www.elastic.co/guide/en/elasticsearch/reference/6.5/setting-system-settings.html

mmap

Elasticsearch 对各种文件混合使用了 NioFs( 注:非阻塞文件系统)和 MMapFs ( 注:内存映射文件系统)。请确保你配置的最大映射数量,以便有足够的虚拟内存可用于 mmapped 文件。这可以暂时设置: sysctl -w vm.max_map_count=262144 或者你可以在 /etc/sysctl.conf 通过修改 vm.max_map_count 永久设置它。

https://www.elastic.co/guide/cn/elasticsearch/guide/current/_file_descriptors_and_mmap.html

磁盘

如果你正在使用 SSDs,确保你的系统 I/O 调度程序是配置正确的。 当你向硬盘写数据,I/O 调度程序决定何时把数据实际发送到硬盘。 大多数默认 nix 发行版下的调度程序都叫做 cfq(完全公平队列)。但它是为旋转介质优化的: 机械硬盘的固有特性意味着它写入数据到基于物理布局的硬盘会更高效。

这对 SSD 来说是低效的,尽管这里没有涉及到机械硬盘。但是,deadline 或者 noop 应该被使用。deadline 调度程序基于写入等待时间进行优化, noop 只是一个简单的 FIFO 队列。

echo noop > /sys/block/sd/queue/scheduler

磁盘挂载

mount -o noatime,data=writeback,barrier=0,nobh /dev/sd* /esdata*

其中,noatime,禁止记录访问时间戳;data=writeback,不记录journal;barrier=0,因为关闭了journal,所以同步关闭barrier;

nobh,关闭buffer_head,防止内核影响数据IO

磁盘其他注意事项

使用 RAID 0。条带化 RAID 会提高磁盘I/O,代价显然就是当一块硬盘故障时整个就故障了,不要使用镜像或者奇偶校验 RAID 因为副本已经提供了这个功能。 另外,使用多块硬盘,并允许 Elasticsearch 通过多个 path.data 目录配置把数据条带化分配到它们上面。 不要使用远程挂载的存储,比如 NFS 或者 SMB/CIFS。这个引入的延迟对性能来说完全是背道而驰的。

三、elasticsearch使用方式调优

当elasticsearch本身的配置没有明显的问题之后,发现es使用还是非常慢,这个时候,就需要我们去定位es本身的问题了,首先祭出定位问题的第一个命令:

hot_threads