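I followed the official procedure for a rolling restart: disable shard allocation, shut down the node, change its configuration and restart it, re-enable allocation, wait a few seconds for the shards to recover and the cluster to reach green, then repeat the process for the next node.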

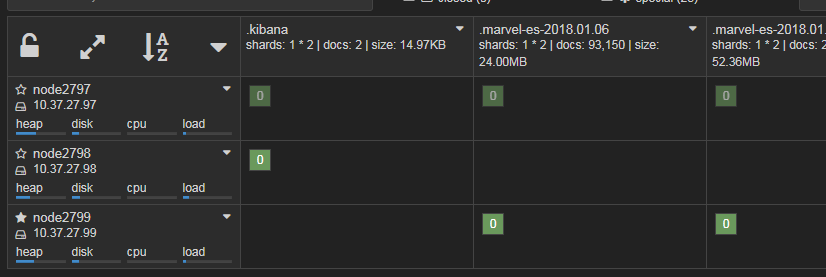

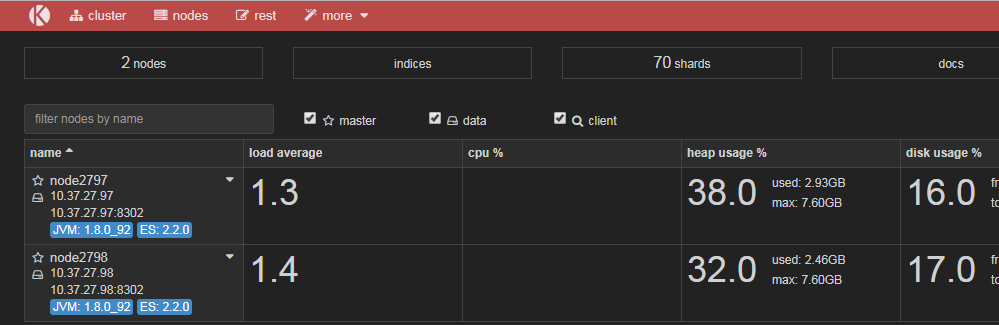
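For reference, the rolling-restart steps described above can be sketched against the REST API. This is only a minimal sketch, not the poster's actual script: `ES_URL` and the `restart_node` callback are hypothetical, and the address is assumed to belong to a node that stays up during the restart.

```python
import json
import urllib.request
from typing import Callable, Optional

# Assumption: address of a node that is NOT the one being restarted.
ES_URL = "http://localhost:9200"


def allocation_settings(enabled: bool) -> dict:
    """Build the transient cluster-settings body that toggles shard allocation."""
    value = "all" if enabled else "none"
    return {"transient": {"cluster.routing.allocation.enable": value}}


def build_request(method: str, path: str,
                  body: Optional[dict] = None) -> urllib.request.Request:
    """Create (but do not send) an HTTP request for the given API path."""
    data = json.dumps(body).encode("utf-8") if body is not None else None
    return urllib.request.Request(
        ES_URL + path,
        data=data,
        method=method,
        headers={"Content-Type": "application/json"},
    )


def call(method: str, path: str, body: Optional[dict] = None) -> dict:
    """Send the request and decode the JSON response."""
    with urllib.request.urlopen(build_request(method, path, body), timeout=90) as resp:
        return json.loads(resp.read().decode("utf-8"))


def restart_one_node(restart_node: Callable[[], None]) -> None:
    """One iteration of the rolling restart.

    restart_node is a hypothetical callback that stops the node, applies the
    configuration change, and starts it again (for example over SSH).
    """
    call("PUT", "/_cluster/settings", allocation_settings(False))  # disable allocation
    restart_node()
    call("PUT", "/_cluster/settings", allocation_settings(True))   # re-enable allocation
    health = call("GET", "/_cluster/health?wait_for_status=green&timeout=60s")
    if health.get("timed_out"):
        raise RuntimeError("cluster did not reach green within 60s")
```

Note that every call here is a master-level action, so while no master is elected these requests would block in the same way as the ones described in this post.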

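Everything went fine at first, but when I got to restarting the master node (node2799 in the figure below), the cluster became completely unavailable. Note that this is different from a red status: with red you can at least still see the state through _cluster/health, whereas here every request blocked without a response and kopf would not open either. About a minute later a new master was elected and the cluster returned to yellow. My cluster is running version 2.2.0.

Is there any way to avoid this? A full minute of unavailability does have some impact, after all.

A friend recommended that I use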

index.unassigned.node_left.delayed_timeout = 0

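this setting, so that shards elect a primary immediately when the cluster loses a node. I tried it, but the cluster still became unavailable. It does not seem to be a shard problem, because I have confirmed that every index has a full set of shards, and it only happens when restarting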
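For readers who want to try the same setting, here is a minimal sketch of the index-settings update it corresponds to. The helper name `delayed_timeout_request` is hypothetical, and the request is only built, not sent. As far as I know this setting only controls how long Elasticsearch waits before reallocating replica shards after a node leaves; it does not take part in master election, which would be consistent with the observation above that it made no difference.

```python
import json
from typing import Tuple


def delayed_timeout_request(timeout: str = "0", index: str = "_all") -> Tuple[str, str, str]:
    """Return (method, path, body) for updating the delayed-allocation timeout.

    timeout uses Elasticsearch time units such as "0", "30s" or "5m".
    index defaults to "_all", which applies the setting to every existing index.
    """
    body = {"settings": {"index.unassigned.node_left.delayed_timeout": timeout}}
    return "PUT", "/" + index + "/_settings", json.dumps(body)


method, path, body = delayed_timeout_request("0")
print(method, path)
print(body)
```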

----------------------------------------------------------------------------------------------------------------

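Some additional detail on the symptoms: while the elected master is restarting, the cluster is in the following state and the indices cannot be accessed:

The remaining two nodes also throw exceptions: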

[2018-01-29 14:43:53,947][DEBUG][transport.netty ] [node2797] disconnecting from [{node2799}{SbMekoCpRSG0aJHEUTpcYw}{10.37.27.99}{10.37.27.99:8302}{zone=test}] due to explicit disconnect call

[2018-01-29 14:43:53,946][WARN ][discovery.zen.ping.unicast] [node2797] failed to send ping to [{node2799}{SbMekoCpRSG0aJHEUTpcYw}{10.37.27.99}{10.37.27.99:8302}{zone=test}]

RemoteTransportException[[node2799][10.37.27.99:8302][internal:discovery/zen/unicast]]; nested: IllegalStateException[received ping request while not started];

Caused by: java.lang.IllegalStateException: received ping request while not started

at org.elasticsearch.discovery.zen.ping.unicast.UnicastZenPing.handlePingRequest(UnicastZenPing.java:497)

at org.elasticsearch.discovery.zen.ping.unicast.UnicastZenPing.access$2400(UnicastZenPing.java:83)

at org.elasticsearch.discovery.zen.ping.unicast.UnicastZenPing$UnicastPingRequestHandler.messageReceived(UnicastZenPing.java:522)

at org.elasticsearch.discovery.zen.ping.unicast.UnicastZenPing$UnicastPingRequestHandler.messageReceived(UnicastZenPing.java:518)

at org.elasticsearch.transport.netty.MessageChannelHandler.handleRequest(MessageChannelHandler.java:244)

at org.elasticsearch.transport.netty.MessageChannelHandler.messageReceived(MessageChannelHandler.java:114)

at org.jboss.netty.channel.SimpleChannelUpstreamHandler.handleUpstream(SimpleChannelUpstreamHandler.java:70)

at org.jboss.netty.channel.DefaultChannelPipeline.sendUpstream(DefaultChannelPipeline.java:564)

at org.jboss.netty.channel.DefaultChannelPipeline$DefaultChannelHandlerContext.sendUpstream(DefaultChannelPipeline.java:791)

at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:296)

at org.jboss.netty.handler.codec.frame.FrameDecoder.unfoldAndFireMessageReceived(FrameDecoder.java:462)

at org.jboss.netty.handler.codec.frame.FrameDecoder.callDecode(FrameDecoder.java:443)

at org.jboss.netty.handler.codec.frame.FrameDecoder.messageReceived(FrameDecoder.java:303)

at org.jboss.netty.channel.SimpleChannelUpstreamHandler.handleUpstream(SimpleChannelUpstreamHandler.java:70)

at org.jboss.netty.channel.DefaultChannelPipeline.sendUpstream(DefaultChannelPipeline.java:564)

at org.jboss.netty.channel.DefaultChannelPipeline$DefaultChannelHandlerContext.sendUpstream(DefaultChannelPipeline.java:791)

at org.elasticsearch.common.netty.OpenChannelsHandler.handleUpstream(OpenChannelsHandler.java:75)

at org.jboss.netty.channel.DefaultChannelPipeline.sendUpstream(DefaultChannelPipeline.java:564)

at org.jboss.netty.channel.DefaultChannelPipeline.sendUpstream(DefaultChannelPipeline.java:559)

at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:268)

at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:255)

at org.jboss.netty.channel.socket.nio.NioWorker.read(NioWorker.java:88)

at org.jboss.netty.channel.socket.nio.AbstractNioWorker.process(AbstractNioWorker.java:108)

at org.jboss.netty.channel.socket.nio.AbstractNioSelector.run(AbstractNioSelector.java:337)

at org.jboss.netty.channel.socket.nio.AbstractNioWorker.run(AbstractNioWorker.java:89)

at org.jboss.netty.channel.socket.nio.NioWorker.run(NioWorker.java:178)

at org.jboss.netty.util.ThreadRenamingRunnable.run(ThreadRenamingRunnable.java:108)

at org.jboss.netty.util.internal.DeadLockProofWorker$1.run(DeadLockProofWorker.java:42)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

[2018-01-29 14:43:53,947][DEBUG][cluster.service ] [node2797] processing [zen-disco-master_failed ({node2799}{SbMekoCpRSG0aJHEUTpcYw}{10.37.27.99}{10.37.27.99:8302}{zone=test})]: took 5ms done applying updated cluster_state (version: 404, uuid: PRd4yp30SKKm-KPgv7aWow)

[2018-01-29 14:43:55,155][DEBUG][action.admin.cluster.state] [node2797] no known master node, scheduling a retry

[2018-01-29 14:43:55,161][DEBUG][action.admin.indices.get ] [node2797] no known master node, scheduling a retry

[2018-01-29 14:43:55,162][DEBUG][action.admin.cluster.state] [node2797] no known master node, scheduling a retry

[2018-01-29 14:43:55,165][DEBUG][action.admin.cluster.health] [node2797] no known master node, scheduling a retry

[2018-01-29 14:44:19,878][DEBUG][indices.memory ] [node2797] recalculating shard indexing buffer, total is [778.2mb] with [2] active shards, each shard set to indexing=[389.1mb], translog=[64kb]

[2018-01-29 14:44:23,945][DEBUG][transport.netty ] [node2797] connected to node [{#zen_unicast_4_CyzI-D1KQj6UlM6k2pP43A#}{10.37.27.99}{10.37.27.99:8302}{zone=test}]

[2018-01-29 14:44:23,945][DEBUG][transport.netty ] [node2797] connected to node [{#zen_unicast_3#}{10.37.27.99}{10.37.27.99:8302}]

[2018-01-29 14:44:25,156][DEBUG][action.admin.cluster.state] [node2797] timed out while retrying [cluster:monitor/state] after failure (timeout [30s])

[2018-01-29 14:44:25,157][INFO ][rest.suppressed ] /_cluster/state/master_node,routing_table,blocks/ Params: {metric=master_node,routing_table,blocks}

MasterNotDiscoveredException[null]

at org.elasticsearch.action.support.master.TransportMasterNodeAction$AsyncSingleAction$5.onTimeout(TransportMasterNodeAction.java:205)

at org.elasticsearch.cluster.ClusterStateObserver$ObserverClusterStateListener.onTimeout(ClusterStateObserver.java:239)

at org.elasticsearch.cluster.service.InternalClusterService$NotifyTimeout.run(InternalClusterService.java:794)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

[2018-01-29 14:44:25,162][DEBUG][action.admin.indices.get ] [node2797] timed out while retrying [indices:admin/get] after failure (timeout [30s])

[2018-01-29 14:44:25,162][INFO ][rest.suppressed ] /_aliases Params: {index=_aliases}

MasterNotDiscoveredException[null]

at org.elasticsearch.action.support.master.TransportMasterNodeAction$AsyncSingleAction$5.onTimeout(TransportMasterNodeAction.java:205)

at org.elasticsearch.cluster.ClusterStateObserver$ObserverClusterStateListener.onTimeout(ClusterStateObserver.java:239)

at org.elasticsearch.cluster.service.InternalClusterService$NotifyTimeout.run(InternalClusterService.java:794)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

[2018-01-29 14:44:25,163][DEBUG][action.admin.cluster.state] [node2797] timed out while retrying [cluster:monitor/state] after failure (timeout [30s])

[2018-01-29 14:44:25,164][INFO ][rest.suppressed ] /_cluster/settings Params: {}

MasterNotDiscoveredException[null]

at org.elasticsearch.action.support.master.TransportMasterNodeAction$AsyncSingleAction$5.onTimeout(TransportMasterNodeAction.java:205)

at org.elasticsearch.cluster.ClusterStateObserver$ObserverClusterStateListener.onTimeout(ClusterStateObserver.java:239)

at org.elasticsearch.cluster.service.InternalClusterService$NotifyTimeout.run(InternalClusterService.java:794)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

[2018-01-29 14:44:25,166][DEBUG][action.admin.cluster.health] [node2797] timed out while retrying [cluster:monitor/health] after failure (timeout [30s])

[2018-01-29 14:44:25,166][INFO ][rest.suppressed ] /_cluster/health Params: {}

MasterNotDiscoveredException[null]

at org.elasticsearch.action.support.master.TransportMasterNodeAction$AsyncSingleAction$5.onTimeout(TransportMasterNodeAction.java:205)

at org.elasticsearch.cluster.ClusterStateObserver$ObserverClusterStateListener.onTimeout(ClusterStateObserver.java:239)

at org.elasticsearch.cluster.service.InternalClusterService$NotifyTimeout.run(InternalClusterService.java:794)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)
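To read the timing out of the log above, the bracketed timestamps can be subtracted directly. This is a small sketch whose helper names are made up for illustration; the two sample lines are copied from the log. It measures how long the cluster-state request waited between "no known master node, scheduling a retry" and the matching timeout.

```python
import re
from datetime import datetime

# Matches the leading "[2018-01-29 14:43:55,155]" part of an Elasticsearch 2.x log line.
LOG_TS = re.compile(r"^\[(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3})\]")


def parse_ts(line: str) -> datetime:
    """Extract the timestamp at the start of a log line."""
    match = LOG_TS.match(line)
    if match is None:
        raise ValueError("line does not start with a timestamp: " + line[:40])
    return datetime.strptime(match.group(1), "%Y-%m-%d %H:%M:%S,%f")


def seconds_between(first: str, second: str) -> float:
    """Elapsed seconds from the first log line to the second."""
    return (parse_ts(second) - parse_ts(first)).total_seconds()


retry_scheduled = ("[2018-01-29 14:43:55,155][DEBUG][action.admin.cluster.state] "
                   "[node2797] no known master node, scheduling a retry")
retry_timed_out = ("[2018-01-29 14:44:25,156][DEBUG][action.admin.cluster.state] "
                   "[node2797] timed out while retrying [cluster:monitor/state] "
                   "after failure (timeout [30s])")

print(seconds_between(retry_scheduled, retry_timed_out))
```

The difference is about 30 seconds, matching the `timeout [30s]` in the log: the request was not rejected, it simply waited for a master until its own timeout expired, which is why clients such as kopf appeared to hang.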

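3 replies

kennywu76 - Wood

Upvoted by: kwan

If the whole cluster becomes unavailable after you restart the master, something else may be causing it. For example, does the cluster have a very large number of indices? For a cluster that is not very large and uses the default settings, a master switchover completes within about 3 seconds. If it took a minute to elect a master, the discovery.zen.ping_timeout parameter may have been changed, or the cluster may be so large that synchronizing the cluster state is slow, which in turn slows down the election of the new master.

Also, since your cluster is on a fairly old version, it may be affected by bugs in early releases. At least one 2.x release had a master memory leak, so if possible you should upgrade to the latest release in the 2.x line.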
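One way to check whether discovery.zen.ping_timeout was changed is to inspect each node's settings. The sketch below assumes the JSON was fetched from `GET /_nodes/settings?flat_settings=true` and has the shape `{"nodes": {id: {"name": ..., "settings": {...}}}}`; that shape and the sample data are assumptions for illustration, not output from the poster's cluster.

```python
DEFAULT_PING_TIMEOUT = "3s"  # documented default of discovery.zen.ping_timeout in 2.x
KEY = "discovery.zen.ping_timeout"


def overridden_ping_timeouts(nodes_info: dict) -> dict:
    """Map node name -> value for nodes that set a non-default ping timeout.

    nodes_info is the decoded response of the nodes-info API with flat settings
    (assumed shape, see the text above). Nodes that do not set the key explicitly
    are treated as using the default.
    """
    overridden = {}
    for node_id, node in nodes_info.get("nodes", {}).items():
        value = node.get("settings", {}).get(KEY)
        if value is not None and value != DEFAULT_PING_TIMEOUT:
            overridden[node.get("name", node_id)] = value
    return overridden


# Hypothetical sample: one node with an explicit 60s timeout, one on defaults.
sample = {
    "nodes": {
        "id-a": {"name": "node2797", "settings": {KEY: "60s"}},
        "id-b": {"name": "node2798", "settings": {"cluster.name": "test"}},
    }
}
print(overridden_ping_timeouts(sample))
```

An empty result would suggest the slow election has another cause, such as the cluster size or the version-specific bugs mentioned in this reply.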

simooge - post-80s IT guy

Upvoted by:

shjdwxy

Upvoted by: