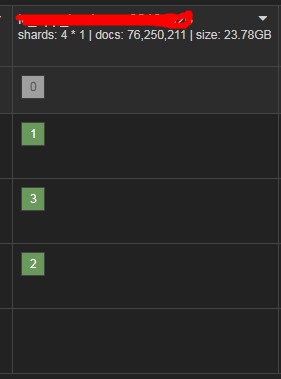

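I have an index that was already created and had been receiving writes for several hours. In the afternoon, one of its primary shards suddenly went missing: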


The machine did not crash, so the problem seems to be with the shard itself.

I checked `unassigned.reason`, and it is ALLOCATION_FAILED (the allocation failed).
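`unassigned.reason` is one of the columns the `_cat/shards` API can return. The sketch below assumes the text was fetched with something like `GET _cat/shards?h=index,shard,prirep,state,unassigned.reason` (no `v`, so no header row) and picks out the unassigned primaries. The function names are illustrative and the sample text is made up to mirror this post.

```python
from typing import List, NamedTuple


class ShardRow(NamedTuple):
    index: str
    shard: int
    prirep: str  # "p" = primary, "r" = replica
    state: str
    reason: str  # empty for shards that are assigned


def parse_cat_shards(text: str) -> List[ShardRow]:
    """Parse `_cat/shards?h=index,shard,prirep,state,unassigned.reason` output."""
    rows = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 4:
            continue  # skip blank or malformed lines
        # Assigned shards have an empty reason column, so they yield only 4 fields.
        reason = fields[4] if len(fields) > 4 else ""
        rows.append(ShardRow(fields[0], int(fields[1]), fields[2], fields[3], reason))
    return rows


def unassigned_primaries(rows: List[ShardRow]) -> List[ShardRow]:
    # Without a started primary, that shard's data cannot be searched or written.
    return [r for r in rows if r.prirep == "p" and r.state == "UNASSIGNED"]


if __name__ == "__main__":
    sample = (
        "lc_app_business-20181026 0 p UNASSIGNED ALLOCATION_FAILED\n"
        "lc_app_business-20181026 1 p STARTED\n"
    )
    for row in unassigned_primaries(parse_cat_shards(sample)):
        print(row.index, row.shard, row.reason)
```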

Then I looked at the ES log and found this:

[2018-10-26 14:49:42,794][ERROR][index.engine ] [node2783] [lc_app_business-20181026][0] failed to merge

org.apache.lucene.index.CorruptIndexException: checksum failed (hardware problem?) : expected=53c9e2f7 actual=ceaff7a4 (resource=BufferedChecksumIndexInput(NIOFSIndexInput(path="/data0/esdata/es-logcluster/nodes/0/indices/lc_app_business-20181026/0/index/_16l.cfs") [slice=_16l_Lucene50_0.tim]))

[2018-10-26 14:49:42,855][DEBUG][index.translog ] [node2783] [lc_app_business-20181026][0] translog closed

[2018-10-26 14:49:42,855][DEBUG][index.engine ] [node2783] [lc_app_business-20181026][0] engine closed [engine failed on: [merge failed]]

[2018-10-26 14:49:42,855][WARN ][index.engine ] [node2783] [lc_app_business-20181026][0] failed engine [merge failed]

org.apache.lucene.index.MergePolicy$MergeException: org.apache.lucene.index.CorruptIndexException: checksum failed (hardware problem?) : expected=53c9e2f7 actual=ceaff7a4 (resource=BufferedChecksumIndexInput(NIOFSIndexInput(path="/data0/esdata/es-logcluster/nodes/0/indices/lc_app_business-20181026/0/index/_16l.cfs") [slice=_16l_Lucene50_0.tim]))

Caused by: org.apache.lucene.index.CorruptIndexException: checksum failed (hardware problem?) : expected=53c9e2f7 actual=ceaff7a4 (resource=BufferedChecksumIndexInput(NIOFSIndexInput(path="/data0/esdata/es-logcluster/nodes/0/indices/lc_app_business-20181026/0/index/_16l.cfs") [slice=_16l_Lucene50_0.tim]))

[2018-10-26 14:49:42,866][DEBUG][index ] [node2783] [lc_app_business-20181026] [0] closing... (reason: [engine failure, reason [merge failed]])

[2018-10-26 14:49:42,869][DEBUG][index.shard ] [node2783] [lc_app_business-20181026][0] state: [STARTED]->[CLOSED], reason [engine failure, reason [merge failed]]

[2018-10-26 14:49:42,869][DEBUG][index.shard ] [node2783] [lc_app_business-20181026][0] operations counter reached 0, will not accept any further writes

[2018-10-26 14:49:42,869][DEBUG][index.store ] [node2783] [lc_app_business-20181026][0] store reference count on close: 0

[2018-10-26 14:49:42,869][DEBUG][index ] [node2783] [lc_app_business-20181026] [0] closed (reason: [engine failure, reason [merge failed]])

It looks like something went wrong during a merge: the shard was detected as corrupted and closed itself. But I can't tell why this happened.
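The exception says that while the merge was reading the `_16l_Lucene50_0.tim` slice of `_16l.cfs`, the CRC32 computed over the bytes on disk (`actual`) did not match the checksum stored in that file's footer when it was written (`expected`), which is why Lucene hints at a hardware problem. A minimal sketch of that comparison, assuming the standard Lucene codec footer (4-byte footer magic, 4-byte algorithm ID of 0, 8-byte checksum, all big-endian, with the CRC32 covering every byte before the checksum). It only handles a standalone segment file; slices inside a `.cfs` would first have to be located via the `.cfe` entry table, which this sketch does not do. The helper names are hypothetical.

```python
import struct
import sys
import zlib
from pathlib import Path
from typing import Tuple

# Lucene's footer magic is the bitwise NOT of CODEC_MAGIC (0x3fd76c17).
FOOTER_MAGIC = ~0x3FD76C17 & 0xFFFFFFFF
FOOTER_LENGTH = 16  # magic (4) + algorithm id (4) + checksum (8)


def check_footer(data: bytes) -> Tuple[bool, int, int]:
    """Return (ok, expected, actual) for a buffer holding one whole Lucene file."""
    if len(data) < FOOTER_LENGTH:
        raise ValueError("too short to contain a codec footer")
    magic, algorithm_id, expected = struct.unpack(">IIQ", data[-FOOTER_LENGTH:])
    if magic != FOOTER_MAGIC or algorithm_id != 0:
        raise ValueError("codec footer not found")
    # The CRC32 covers everything up to, but not including, the 8-byte checksum.
    actual = zlib.crc32(data[:-8]) & 0xFFFFFFFF
    return expected == actual, expected, actual


def check_file(path: Path) -> None:
    ok, expected, actual = check_footer(path.read_bytes())
    status = "OK" if ok else "checksum failed"
    # Same hex notation as the "expected=... actual=..." in the log above.
    print(f"{path.name}: {status} expected={expected:x} actual={actual:x}")


if __name__ == "__main__":
    for name in sys.argv[1:]:
        check_file(Path(name))
```

In Lucene itself this check is done by `CodecUtil.checkFooter`; the sketch only reproduces its arithmetic, so it can tell you that bytes changed on disk but not what changed them.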

In the /data0/esdata/es-logcluster/nodes/0/indices/lc_app_business-20181026/0/index directory there is a file named corrupted_34ejg-QUR6e3bR_12dB6vQ, and its contents are essentially the same Lucene exception shown above.
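That `corrupted_*` file appears to be the marker Elasticsearch writes when it fails a shard on a `CorruptIndexException`; while it is present, that shard copy is refused on open, which would fit the repeated ALLOCATION_FAILED. A small sketch that lists such markers under a data path, assuming the directory layout seen in this post (`nodes/<n>/indices/<index>/<shard>/index`). On 5.x and later the `<index>` component is the index UUID rather than its name. The function name is hypothetical.

```python
from pathlib import Path
from typing import List, Tuple


def find_corruption_markers(data_path: Path) -> List[Tuple[str, str, Path]]:
    """Return (index, shard, marker_path) for each corrupted_* file under data_path.

    Assumed layout: <data_path>/nodes/<n>/indices/<index>/<shard>/index/corrupted_<id>
    """
    found = []
    for marker in sorted(data_path.glob("nodes/*/indices/*/*/index/corrupted_*")):
        shard_dir = marker.parent.parent  # .../<index>/<shard>
        found.append((shard_dir.parent.name, shard_dir.name, marker))
    return found


if __name__ == "__main__":
    # A path that does not exist simply yields no results.
    for index, shard, marker in find_corruption_markers(Path("/data0/esdata/es-logcluster")):
        print(f"[{index}][{shard}] {marker.name}")
```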

Can the data be recovered? And I still can't find out why the merge failed.

6 replies

rockybean - Elastic Certified Engineer, ElasticStack Fans, WeChat official account: ElasticTalk


No replicas configured?
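The reasoning behind this question: if the index had a replica, a copy of shard 0 would still exist elsewhere and could take over from the failed primary. The replica count is the dynamic index setting `index.number_of_replicas`, which can be changed with the update-index-settings API (`PUT <index>/_settings`). The sketch below only builds that request with the standard library; the URL `http://localhost:9200` and the helper names are placeholders, and nothing is sent unless `apply` is called. Note that this only helps before a failure: a new replica is copied from the primary, so it cannot bring back a primary that is already lost.

```python
import json
import urllib.request


def replica_settings_request(es_url: str, index: str, replicas: int) -> urllib.request.Request:
    """Build PUT <index>/_settings setting index.number_of_replicas."""
    body = json.dumps({"index": {"number_of_replicas": replicas}}).encode("utf-8")
    return urllib.request.Request(
        url=f"{es_url.rstrip('/')}/{index}/_settings",
        data=body,
        method="PUT",
        headers={"Content-Type": "application/json"},
    )


def apply(req: urllib.request.Request) -> str:
    # Actually sends the request; requires a reachable cluster.
    with urllib.request.urlopen(req) as resp:
        return resp.read().decode("utf-8")


if __name__ == "__main__":
    req = replica_settings_request("http://localhost:9200", "lc_app_business-20181026", 1)
    print(req.get_method(), req.full_url, req.data.decode("utf-8"))
```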

laoyang360 - Author of 《一本书讲透Elasticsearch》, Elastic Certified Engineer; [死磕Elasticsearch] Knowledge Planet (知识星球): http://t.cn/RmwM3N9; WeChat official account: 铭毅天下; blog: https://elastic.blog.csdn.net


zqc0512 - andy zhou


If the disk ever develops a problem, you're in real trouble...

lvwendong


kr9226 - I am willing to walk, step by step, toward the world I want


bingo919 - bingo


[2020-12-26T01:38:22,612][WARN ][o.e.i.c.IndicesClusterStateService] [bd_lass_elasticsearch-data0] [[app_2020.12.23-shrink][0]] marking and sending shard failed due to [shard failure, reason [recovery]]

org.apache.lucene.index.CorruptIndexException: checksum failed (hardware problem?) : expected=f5dvwg actual=1ukjrs4 (resource=name [_1_Lucene50_0.pos], length [88425081], checksum [f5dvwg], writtenBy [7.7.0]) (resource=VerifyingIndexOutput(_1_Lucene50_0.pos))

at org.elasticsearch.index.store.Store$LuceneVerifyingIndexOutput.readAndCompareChecksum(Store.java:1212) ~[elasticsearch-6.8.0.jar:6.8.0]

at org.elasticsearch.index.store.Store$LuceneVerifyingIndexOutput.writeByte(Store.java:1190) ~[elasticsearch-6.8.0.jar:6.8.0]

at org.elasticsearch.index.store.Store$LuceneVerifyingIndexOutput.writeBytes(Store.java:1220) ~[elasticsearch-6.8.0.jar:6.8.0]

at org.elasticsearch.indices.recovery.MultiFileWriter.innerWriteFileChunk(MultiFileWriter.java:120) ~[elasticsearch-6.8.0.jar:6.8.0]

at org.elasticsearch.indices.recovery.MultiFileWriter.access$000(MultiFileWriter.java:43) ~[elasticsearch-6.8.0.jar:6.8.0]

at org.elasticsearch.indices.recovery.MultiFileWriter$FileChunkWriter.writeChunk(MultiFileWriter.java:200) ~[elasticsearch-6.8.0.jar:6.8.0]

at org.elasticsearch.indices.recovery.MultiFileWriter.writeFileChunk(MultiFileWriter.java:68) ~[elasticsearch-6.8.0.jar:6.8.0]

at org.elasticsearch.indices.recovery.RecoveryTarget.writeFileChunk(RecoveryTarget.java:459) ~[elasticsearch-6.8.0.jar:6.8.0]

at org.elasticsearch.indices.recovery.PeerRecoveryTargetService$FileChunkTransportRequestHandler.messageReceived(PeerRecoveryTargetService.java:629) ~[elasticsearch-6.8.0.jar:6.8.0]

at org.elasticsearch.indices.recovery.PeerRecoveryTargetService$FileChunkTransportRequestHandler.messageReceived(PeerRecoveryTargetService.java:603) ~[elasticsearch-6.8.0.jar:6.8.0]

at org.elasticsearch.transport.TransportRequestHandler.messageReceived(TransportRequestHandler.java:30) ~[elasticsearch-6.8.0.jar:6.8.0]

at org.elasticsearch.transport.RequestHandlerRegistry.processMessageReceived(RequestHandlerRegistry.java:66) ~[elasticsearch-6.8.0.jar:6.8.0]

at org.elasticsearch.transport.TcpTransport$RequestHandler.doRun(TcpTransport.java:1087) ~[elasticsearch-6.8.0.jar:6.8.0]

at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingAbstractRunnable.doRun(ThreadContext.java:751) ~[elasticsearch-6.8.0.jar:6.8.0]

at org.elasticsearch.common.util.concurrent.AbstractRunnable.run(AbstractRunnable.java:37) ~[elasticsearch-6.8.0.jar:6.8.0]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_232]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_232]

at java.lang.Thread.run(Thread.java:748) [?:1.8.0_232]

[2020-12-26T22:56:07,517][INFO ][o.e.m.j.JvmGcMonitorService] [bd_lass_elasticsearch-data0] [gc][828469] overhead, spent [348ms] collecting in the last [1s]
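The checksums in this reply (`expected=f5dvwg actual=1ukjrs4`) look unlike the hex values in the original question because here the check is done by Elasticsearch's `Store` while receiving files during peer recovery, not by Lucene's `CodecUtil`. Elasticsearch appears to record file checksums as base-36 strings (`Store.digestToString` uses `Long.toString(digest, Character.MAX_RADIX)` in the 6.x source). A small helper to convert between the two notations so values from both kinds of log line can be compared; the function names are hypothetical.

```python
import string

# Digit alphabet Java uses for radix 36 (Character.MAX_RADIX).
_DIGITS = string.digits + string.ascii_lowercase


def base36_to_hex(digest: str) -> str:
    """Convert an Elasticsearch base-36 checksum string to Lucene-style hex."""
    return format(int(digest, 36), "x")


def hex_to_base36(checksum: str) -> str:
    """Convert a Lucene hex checksum to the base-36 form Elasticsearch logs."""
    value = int(checksum, 16)
    if value == 0:
        return "0"
    out = []
    while value:
        value, rem = divmod(value, 36)
        out.append(_DIGITS[rem])
    return "".join(reversed(out))


if __name__ == "__main__":
    for digest in ("f5dvwg", "1ukjrs4"):
        print(digest, "->", base36_to_hex(digest))
```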